Abstract

Introduction: The use of chatbots in healthcare is an area of study receiving increased academic interest. As the knowledge base grows, the granularity in the level of research is being refined. There is now more targeted work in specific areas of healthcare, for example, chatbots for anxiety and depression, cancer care, and pregnancy support. The aim of this paper is to systematically review and summarize the research conducted on the use of chatbots in the field of addiction, specifically the use of chatbots as supportive agents for those who suffer from a substance use disorder (SUD). Methods: A systematic search of scholarly databases using the broad search criteria of (“drug” OR “alcohol” OR “substance”) AND (“addiction” OR “dependence” OR “misuse” OR “disorder” OR “abuse” OR harm*) AND (“chatbot” OR “bot” OR “conversational agent”) with an additional clause applied of “publication date” ≥ January 01, 2016 AND “publication date” ≤ March 27, 2022, identified papers for screening. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines were used to evaluate eligibility for inclusion in the study, and the Mixed Methods Appraisal Tool was employed to assess the quality of the papers. Results: The search and screening process identified six papers for full review, two quantitative studies, three qualitative, and one mixed methods. The two quantitative papers considered an adaptation to an existing mental health chatbot to increase its scope to provide support for SUD. The mixed methods study looked at the efficacy of employing a bespoke chatbot as an intervention for harmful alcohol use. Of the qualitative studies, one used thematic analysis to gauge inputs from potential users, and service professionals, on the use of chatbots in the field of addiction, based on existing knowledge, and envisaged solutions. The remaining two were useability studies, one of which focussed on how prominent chatbots, such as Amazon Alexa, Apple Siri, and Google Assistant can support people with an SUD and the other on the possibility of delivering a chatbot for opioid-addicted patients that is driven by existing big data. Discussion/Conclusion: The corpus of research in this field is limited, and given the quality of the papers reviewed, it is suggested more research is needed to report on the usefulness of chatbots in this area with greater confidence. Two of the papers reported a reduction in substance use in those who participated in the study. While this is a favourable finding in support of using chatbots in this field, a strong message of caution must be conveyed insofar as expert input is needed to safely leverage existing data, such as big data from social media, or that which is accessed by prevalent market leading chatbots. Without this, serious failings like those highlighted within this review mean chatbots can do more harm than good to their intended audience.

Introduction

In recent years, social engagement powered by technology has undergone significant growth, both in solution availability and scale of uptake. Here, social media platforms such as Facebook, TikTok, Snapchat, and Instagram have facilitated an increase in technology-based social interaction using applications that employ a messaging interface. Understandably, this has generated interest in how this technology can be used to benefit health and wellbeing [1-3]. In part, this has been a response to the COVID-19 pandemic where alternative delivery mechanisms were necessary to maintain service provision within the health sector [4], but also a reflection of the contemporary technological landscape, and an ongoing commitment to better connect services with a technology-motivated population [4, 5]. Furthermore, the burgeoning use of communication platforms such as Zoom and Microsoft Teams, a result of face-to-face meetings being restricted during the pandemic, has seen people become accustomed to and comfortable with support services being delivered using digital solutions [4].

For some, such as those with a history of addiction, the restrictions enforced during the pandemic have seen an emergent dependence on technology, with mutual support meetings such as Alcoholics Anonymous being conducted via Zoom, along with the amplified use of support groups on social media platforms, such as Facebook [6]. This newly adopted model of support has enriched the existing online provision accessed by this cohort, where web-based interventions such as chatbots, online counselling, and social media groups, have all been considered as sources of support [7, 8]. These systemized solutions have addressed different aspects of treatment and care for people with a history of substance misuse, including preventative interventions, health education, reduction plans, treatment programmes, and recovery support [9]. Examples of these support options are the “drink aware” website housing tools to help people change their relationship with alcohol [10], the “breaking free” online programme providing recovery support [11], and the many social media groups set up to facilitate mutual support or disseminate addiction services to a wide audience, often with engagement from healthcare professionals [12].

Over the past 5 years, chatbots have become a well-established branch of human-computer interaction (HCI), digitizing services traditionally undertaken by a human agent [1]. In 2016, Facebook announced its intention to integrate chatbot functionality with its messenger platform [6]. The subsequent amalgamation of chatbot and social media platform provided a stimulus for the rapid growth in chatbot solutions across many sectors [1, 2]. Chatbots use natural language processing, a branch of artificial intelligence, to emulate a real-time conversation. They facilitate the delivery of a dialogue that is both sociable and engaging to end users, making them a popular choice in HCI design [13]. In addition to systemizing social engagement, chatbots have succeeded because they offer a higher level of intelligence in directing users to helpful content than a search facility is capable of. They also increase productivity by reaching a greater audience than is possible through non-automated conversations, and they can be programmed to deliver a broad range of solutions, limited only by the imagination and the complexity of the syntactical rules that can be systemized [1, 14].

In the healthcare sector, chatbots have been used to educate, support, treat, and diagnose people [15] with diverse medical needs, such as depression, insomnia, and obesity [16]. The current interest in healthcare chatbots is evident in a Google Scholar search for publications in the last 5 years using the term “chatbot AND healthcare.” Here, a year-on-year increase was noted, with 89 papers returned for 2016 and 3,360 for 2021. The most significant increase was in 2020 and 2021, the years spanning the COVID-19 pandemic. Within this growing body of knowledge, the efficacy and reputation of chatbots in healthcare have been considered [3, 15], along with the ethical implications of using a systematic agent, instead of a human, in a supporting role [17]. Much of the work published has considered chatbots and healthcare in general terms, for example, chatbots for therapy, chatbots for education, and chatbots for diagnosis [18]. As the knowledge base grows, the granularity in the level of research is being refined, seeing more research in targeted areas, for example, anxiety and depression [19], cancer care [20], and pregnancy support [21]. The growth in the applied use of chatbots means there is an increasing need for a better-evidenced study of their usefulness [1]. This is especially true in healthcare, where it is important to understand what effect they have on health outcomes in the long and short term [18].

In the area of drug and alcohol addiction, the mode of interaction exercised by chatbots presents an opportunity to help people suffering from a substance use disorder (SUD) [7]. The computational discourse exercised by chatbots means a person does not need to feel judged in the context of their own guilt, shame, or embarrassment [1, 16], all commonplace with SUD [22]. Furthermore, the stigmatization experienced by people with SUD can prevent them from seeking help [23]. Chatbots are able to remove this barrier by providing the opportunity for a person to be heard without fear of being judged as an individual with faults [23]. As they operate online, they are accessible at the time of need when other support options may not be available [9]. For people with an SUD, having accessible support can be important in managing the symptomology, where the unpredictable nature of a triggering event can make maintaining abstinence more challenging [24]. Furthermore, chatbots have endless patience, so previous failed attempts at maintaining abstinence do not need to deter people from accessing this model of support [25].

Methods

Aim

The aim of this study is to understand how chatbots have been used to offer support to people with an SUD. The scope has been confined to drugs and alcohol, both substances with a pharmacological component that can affect perception, mood, consciousness, cognition, or behaviour. As such, the remit of this study excludes chatbots that target nicotine or behavioural addictions such as gambling. The purpose of this is to focus on how chatbot technology has been used to support a population exposed to some of the consequences of SUD, for example, physical withdrawal, impulsive behaviour, and impaired judgement [24]. This work was initiated as part of a larger project to implement a new chatbot solution. This solution was co-produced with prospective users to provide a different type of digital support to people in recovery from drug and alcohol addiction. The output from the present study provided important input to the design process for this bespoke chatbot solution, and as such, it is envisioned that it could be used in other such projects looking to expand this type of digital support in this area of healthcare [25, 26].

Search Strategy and Paper Selection

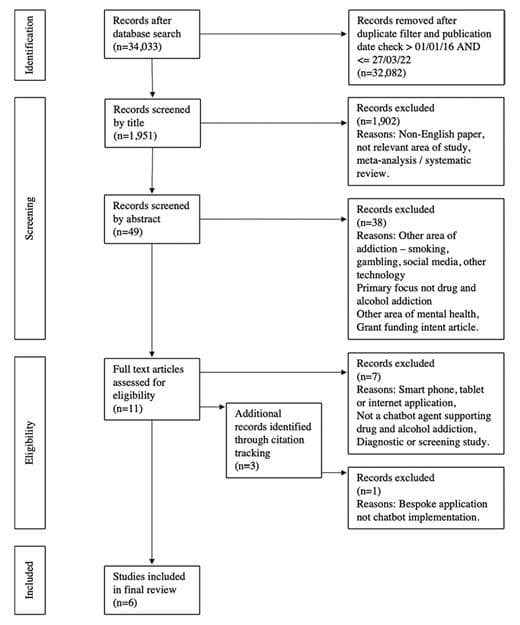

The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement provides a framework for conducting systematic reviews [27]. It was developed to help facilitate comprehensive and transparent reporting of quantitative search results and has been adopted as a standardized system for identifying studies to be included in systematic reviews [28]. In conformity with this, PRISMA has been employed in the present study, to clearly convey the number of papers identified, along with their eligibility in the review process. To support this process, the PRISMA 2020 statement was used which provides a checklist containing the items considered necessary for reporting a systematic review [28].

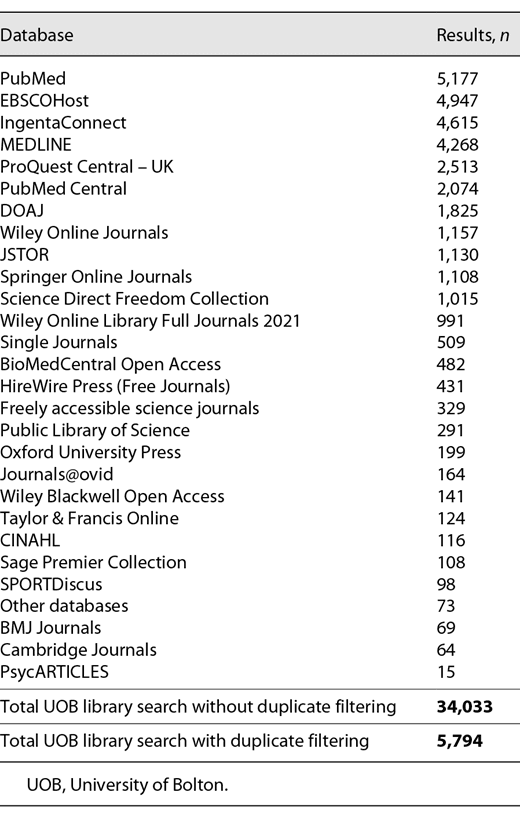

A literature search was conducted using Discover@Bolton, a library search facility available at the University of Bolton. This search facility trawls scholarly journals and databases for publications matching a given search term within apposite disciplines, for example, public health, psychology, computer science, social and applied sciences, and social welfare. The case insensitive search term (“drug” OR “alcohol” OR “substance”) AND (“addiction” OR “dependence” OR “misuse” OR “disorder” OR “abuse” OR harm*) AND (“chatbot” OR “bot” OR “conversational agent”) was used with an additional clause of “publication date” ≥ January 01, 2016 AND “publication date” ≤ March 27, 2022. The search included titles, abstracts, and full-text content. The reported results for this review are accurate on and up to March 27, 2022, the date the search was carried out. The number of results by data source is shown in Table 1.
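The logic of the search term can be illustrated with a small filter. This is a minimal sketch only: the function name `matches_search` and the whole-word matching rule are assumptions made for illustration, and the library search facility will apply its own tokenization and matching, so results may differ (for example, this sketch does not match plurals such as "chatbots").

```python
import re
from datetime import date
from typing import List

# Term groups taken from the search string; "harm*" is a prefix wildcard.
SUBSTANCE = ["drug", "alcohol", "substance"]
PROBLEM = ["addiction", "dependence", "misuse", "disorder", "abuse", "harm*"]
AGENT = ["chatbot", "bot", "conversational agent"]

# Publication date window used in the review.
START, END = date(2016, 1, 1), date(2022, 3, 27)


def _term_pattern(term: str) -> "re.Pattern[str]":
    """Case-insensitive whole-word pattern; a trailing * matches any word suffix."""
    if term.endswith("*"):
        body = re.escape(term[:-1]) + r"\w*"
    else:
        body = re.escape(term)
    return re.compile(r"\b" + body + r"\b", re.IGNORECASE)


def _any_term(text: str, terms: List[str]) -> bool:
    """True when at least one term of an OR-group occurs in the text."""
    return any(_term_pattern(term).search(text) for term in terms)


def matches_search(text: str, published: date) -> bool:
    """True when the text satisfies all three OR-groups and the date window."""
    in_window = START <= published <= END
    return in_window and all(
        _any_term(text, group) for group in (SUBSTANCE, PROBLEM, AGENT)
    )
```

For example, `matches_search("A chatbot to reduce alcohol misuse", date(2020, 5, 1))` satisfies all three groups and the date clause, whereas the same title dated before 2016 does not.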

Table 1.

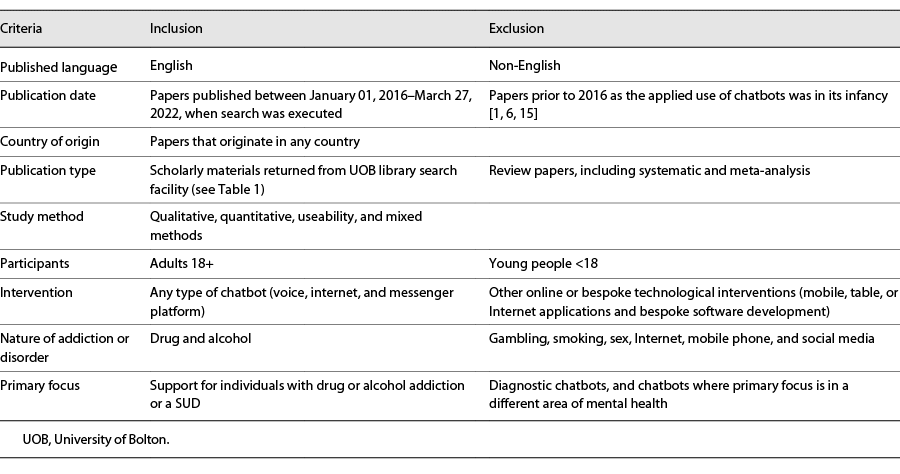

Inclusion and Exclusion Criteria

Papers returned in a search of the named data sources shown in Table 1 were considered eligible for review if they met the inclusion and exclusion criteria in Table 2. Papers from other sources, or non-empirical and popular contributions on the use of chatbots in addiction, were excluded as the content could not be accurately assessed as part of a systematic review, examples being an unstudied implementation and a recent expert commentary [7, 25, 26].

Table 2.

Quality Assessment

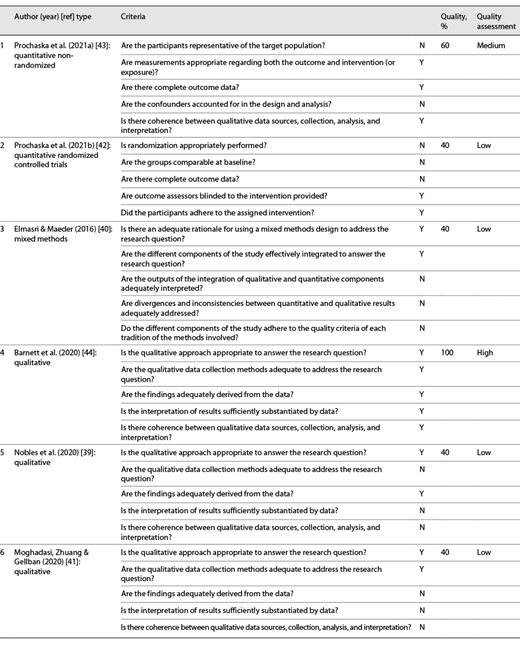

To ensure consistency in the quality assessment of the papers, the initial assessment was undertaken by the same researcher. For quality purposes, a further two reviewers then independently validated this process. The assessment was performed using the latest version of the Mixed Methods Appraisal Tool (MMAT) [29, 30]. This is a tool designed to facilitate systematic and concomitant appraisal of empirical studies that combine different research designs, specifically, qualitative, quantitative, and mixed methods. The MMAT has been shown as an efficient and reliable way to appraise the quality of papers, having been carefully critiqued and subsequently augmented since its original form [29-31]. The tool consists of methodological quality criteria, appropriate to the type of paper, for example, for qualitative papers, reviewers consider if the interpretation of the results is substantiated by the data presented. For quantitative papers, questions are asked which consider such things as, if the measures used are appropriate, or if the sample represents the intended population [29, 30].

Results

Papers Eligible for Inclusion

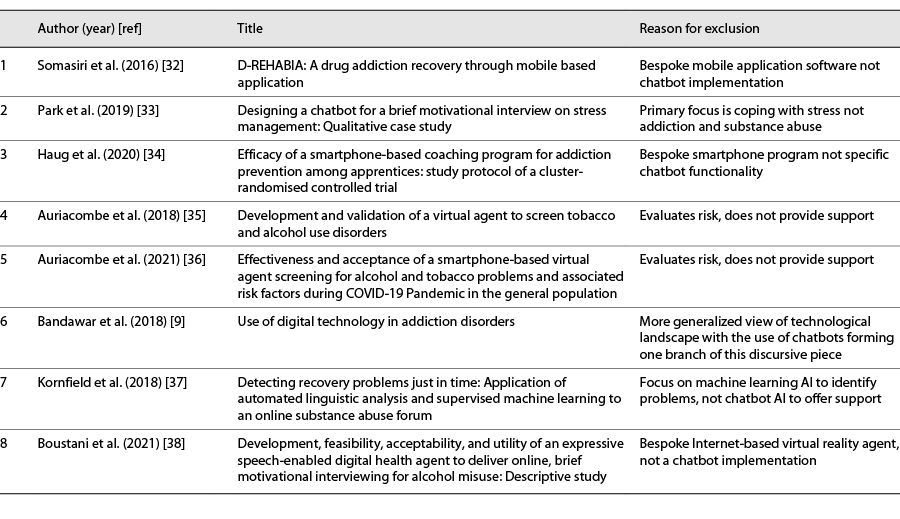

The total number of papers returned by the previously discussed search term was 34,033. Table 1 reports this by the individual data source queried. Duplicates and records before the requisite publication date were removed (n = 32,082), leaving 1,951 papers. These papers were title screened, which saw 1,902 studies excluded as not being relevant, being published in a language other than English, or being a meta-analysis or review paper. The abstracts of the remaining studies (n = 49) were assessed for eligibility. Of these, 38 papers were excluded because they were concerned with a different area of addiction (smoking, gambling, or technology), because their primary focus was not drug and alcohol addiction or SUD, or because they studied a different area of mental health. This saw 11 papers progress to full review, which yielded a further 3 studies through citation tracking [32-38]. The full text of the accumulated 14 papers was considered. Of these, 8 were excluded as either diagnostic, not supporting problem drug and alcohol use, or being a bespoke software development as opposed to a chatbot implementation (see Table 3). This included Internet, tablet, and Internet-based content.
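The screening counts can be checked with simple arithmetic. The sketch below uses only the figures reported in the paragraph above; the variable names are illustrative.

```python
# Counts as reported in the screening narrative.
identified = 34_033
removed_duplicates_or_out_of_range = 32_082
excluded_on_title = 1_902
excluded_on_abstract = 38
added_by_citation_tracking = 3
excluded_on_full_text = 8

# Each stage follows from the previous one.
title_screened = identified - removed_duplicates_or_out_of_range
abstract_screened = title_screened - excluded_on_title
full_review = abstract_screened - excluded_on_abstract
full_text_assessed = full_review + added_by_citation_tracking
included = full_text_assessed - excluded_on_full_text

print(title_screened, abstract_screened, full_review, full_text_assessed, included)
# prints: 1951 49 11 14 6
```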

Table 3.

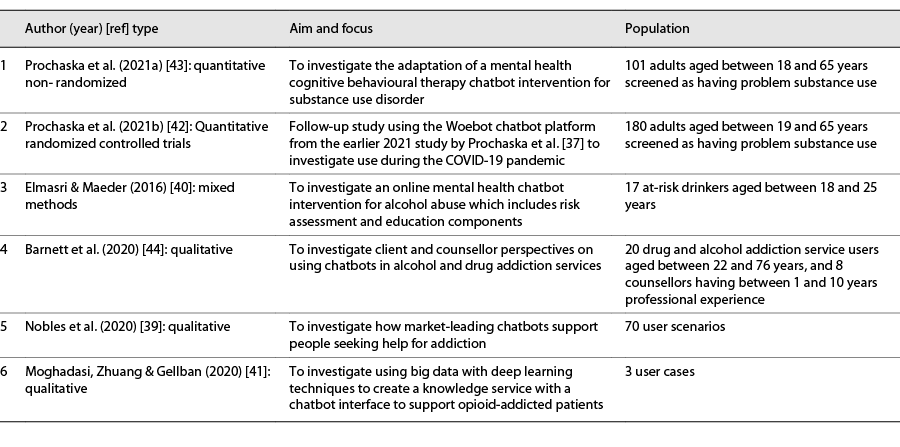

This process left a total of 6 papers eligible for review, as shown in the PRISMA diagram in Figure 1. The papers excluded through the full-text assessment against the inclusion and exclusion criteria are listed in Table 3. The characteristics of each of the qualifying papers are shown in Table 4.

Table 4.

Fig. 1. PRISMA diagram showing flow of information in the search and screening process.

Quality Assessment Outcomes

The outputs from the PRISMA process (n = 6) were quality assessed using the previously described MMAT. Using the criteria set out in the MMAT framework [22, 23], the papers were given a percentage quality score based on the number of criteria met. A percentage of 0, 20, or 40% was considered low quality, 60 or 80% was considered medium, and above 80% was considered high. Of the papers within this review, when evaluated against the study-specific quality criteria, four were considered low quality [39-42], one as medium [43], and one as high [44] (see Table 5). In addition to the MMAT quality assessment on individual papers, the eligible studies were considered in terms of their generalized quality. Here, it was noted that the findings in two of the papers [40, 44] used a small sample size of fewer than 29 participants, and another two of the papers [39, 41] were based on user scenarios that did not directly engage active participants. Furthermore, only one of the quantitative studies was a randomized controlled trial (RCT) [42], which, due to a reported clerical error, had exclusion information missing in the randomization process. In addition to this, the demographic of the participants in both the quantitative studies [42, 43] was skewed toward non-Hispanic women. This limitation was raised in the first quantitative study [43], with the intention that it be corrected in the randomized design of the second [42], which was conducted by the same researchers; however, this characteristic was carried through to the second study [42]. Given the small sample sizes, reliance on user scenarios, and inclusion of only one RCT study with missing information in the randomization process, the dependability of the conclusions that can be drawn on the efficacy of chatbots in the field of drug and alcohol addiction has been limited by the overall quality of the eligible papers.
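The banding of MMAT scores described above can be sketched as follows. The function names are illustrative, and the default of five criteria per paper is an assumption inferred from the 20% steps in the reported percentages.

```python
def mmat_percentage(criteria_met: int, total_criteria: int = 5) -> int:
    """Convert the number of criteria met into a percentage score.

    total_criteria=5 is an assumption inferred from the 20% steps in the text.
    """
    if not 0 <= criteria_met <= total_criteria:
        raise ValueError("criteria_met must be between 0 and total_criteria")
    return round(100 * criteria_met / total_criteria)


def quality_band(percentage: int) -> str:
    """Map a percentage score to the bands used in this review."""
    if percentage <= 40:
        return "low"
    if percentage <= 80:
        return "medium"
    return "high"
```

Under these assumptions, a paper meeting two criteria scores 40% and is banded as low, four criteria gives 80% (medium), and all five gives 100% (high).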

Table 5.

Overview of Included Papers

There were six papers eligible for inclusion in the review; an overview of their characteristics is given in Table 4. Of the six papers, two exclusively used quantitative measures to study the adaptation of an existing chatbot platform for SUD [42, 43]. One applied a mixed methods approach to measure the efficacy of employing a bespoke chatbot to deliver addiction support [40]. One used thematic analysis to qualitatively gauge inputs from potential users, and service professionals, on the use of chatbots in the field of addiction, based on existing knowledge, and envisaged solutions [44]. The remaining two were useability studies, one of which focussed on how prominent chatbots, such as Amazon Alexa, Apple Siri, and Google Assistant, support addiction [39] and the other on the possibility of delivering a chatbot for opioid-addicted patients that is driven by existing big data [41].

Bespoke Chatbot Solutions

There were four studies looking at bespoke chatbots targeted at addiction [40-43]. The first publication, chronologically, was the paper by Elmasri et al. [40], which presented a chatbot developed as an intervention for alcohol abuse. Unlike the other bespoke studies, this chatbot was not assigned the identity of a named agent. An expert panel was gathered to provide the input for the design, whereby a set of requirements for the chatbot was identified, for example, anonymous and immediate advice, logical conversation, friendly advisor, and a mechanism for offering feedback relevant to an alcohol assessment. Using these requirements, a prototype chatbot was created using Artificial Intelligence Markup Language. The chatbot comprised 4 parts: (1) conversation initiation, (2) alcohol education module, (3) alcohol risk assessment, and (4) conversation conclusion. Conversation initiation and conclusion were achieved with simple predefined content. Alcohol education and risk assessment were implemented as conversation maps within the chatbot. The education process managed the dialogue to deliver user-specific information on drinking habits. Keyword identification was used to invoke a branch to the user-selected topic, with the chatbot initiating a further question to enable an accurate response containing appropriate information such as social risks, effect on organs, or alcohol content. The Alcohol Use Disorders Identification Test-Consumption (AUDIT-C) measure was used for the risk assessment, with the chatbot using a knowledge base to store answers until it had gathered sufficient information to provide relevant feedback.
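The two mechanisms described above, keyword identification to select a topic branch and storing answers until enough have been gathered, can be illustrated with a short sketch. It is written in Python rather than Artificial Intelligence Markup Language, and the keyword lists, function name, and class name are hypothetical; the study's actual conversation maps are not reproduced here.

```python
import re
from typing import List, Optional

# Hypothetical topic keywords; the study's AIML content is not reproduced here.
TOPIC_KEYWORDS = {
    "social risks": ["friends", "family", "work", "social"],
    "effect on organs": ["liver", "heart", "brain", "organs"],
    "alcohol content": ["units", "strength", "percent", "content"],
}


def select_topic(message: str) -> Optional[str]:
    """Return the first topic with a keyword in the user's message, else None."""
    words = re.findall(r"[a-z]+", message.lower())
    for topic, keywords in TOPIC_KEYWORDS.items():
        if any(keyword in words for keyword in keywords):
            return topic
    return None


class RiskAssessment:
    """Store item scores until all items are answered, then return the total."""

    def __init__(self, item_count: int = 3) -> None:
        # AUDIT-C has three items, each scored from 0 to 4.
        self.item_count = item_count
        self.answers: List[int] = []

    def record(self, score: int) -> Optional[int]:
        if not 0 <= score <= 4:
            raise ValueError("each item is scored from 0 to 4")
        self.answers.append(score)
        if len(self.answers) < self.item_count:
            return None  # still gathering information
        return sum(self.answers)  # enough information to give feedback
```

In this sketch, `select_topic("What does drinking do to my liver?")` branches to "effect on organs", and a `RiskAssessment` returns `None` for the first two recorded answers and the total score on the third.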

A total of 17 participants between the ages of 18 and 25 years, screened as low to medium risk drinkers (<5 drinks a day), took part in the study. Each participant was allocated 30 min to test and evaluate the chatbot; this included an introduction, brief demonstration, and an estimated 5 min to complete the education module and risk assessment individually. The remaining time was spent on the evaluation, where participants were asked to complete an 8-item satisfaction questionnaire using a 4-point Likert scale, based on the client satisfaction survey, and a structured interview. The client satisfaction survey results summary reported satisfaction to be generally high (M = 3.55, SD = 0.57), with no significance drawn against a control group. The interview results were grouped through topic analysis and categorized as positives, negatives, comments, and suggestions. Positives were elements that contributed to user satisfaction, negatives were elements that produced undesirable effects, comments gave general feedback, and suggestions were ways to improve the chatbot. It was reported that there was a good level of agreement among the participants with a number of strong positives, such as informative knowledge base (n = 15), simple guided conversation (n = 15), and quick response time (n = 8). User frustration with the chatbot was reported in the negatives, with too much information (n = 5), confusing conversation (n = 3), and undesirable interface (n = 5). No further evaluation was conducted. However, it was noted that the chatbot was generally received positively and that a more sophisticated model with a larger sample size would further enhance user satisfaction and enable more comprehensive statistical analysis.

The paper by Moghadasi et al. [41] presented a chatbot called Robo, which used big data taken from the social content aggregation platform Reddit. The dataset was amassed using a daily “crawler” module (a programme to collect data from the Internet), looking for “subreddits” (dedicated topics) in areas such as drugs or opioids. This dataset formed a repository of “question and answer” pairs, with 7,596 questions and 12,898 answers, as one question could have multiple answers. The length of the sentences was analysed to inform the decision on what model to adopt to encode the data for use by the chatbot. A deep averaging network architecture was selected, where the sentences were encoded by embedding them as a vector average (in natural language processing terms, the conversion of human text to a format understood by computers). This was fed through several layers to computationally learn a higher level of abstraction in the representation of the data. From this, single-turn response matching (a response based on the last user input) was used with the machine learning component, query semantic understanding (QSU), where the users’ queries were encoded and matched against the encoded dataset. The output of the QSU process is the highest scoring match based on a similarity calculation. Robo was given a web-based chatbot interface to interrogate the underlying dataset through the QSU component.
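The encode-and-match idea can be illustrated with a simplified sketch. It covers only the averaging step and the similarity match; the learned layers of a deep averaging network are omitted. The toy word vectors, the use of cosine similarity, and the choice to match against stored questions are all assumptions made for illustration, not details confirmed by the paper.

```python
import math
from typing import List, Sequence, Tuple

# Toy 3-dimensional word vectors (hypothetical values, for illustration only).
WORD_VECTORS = {
    "sleep": [0.9, 0.1, 0.0],
    "relax": [0.8, 0.2, 0.1],
    "muscle": [0.6, 0.3, 0.1],
    "dose": [0.1, 0.9, 0.2],
    "tablet": [0.2, 0.8, 0.1],
    "mix": [0.1, 0.2, 0.9],
    "combine": [0.0, 0.3, 0.8],
}


def encode(sentence: str) -> List[float]:
    """Average the vectors of known words (the averaging step only)."""
    vectors = [WORD_VECTORS[w] for w in sentence.lower().split() if w in WORD_VECTORS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 when either vector is all zeros."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def best_answer(query: str, qa_pairs: Sequence[Tuple[str, str]]) -> str:
    """Single-turn matching: return the answer paired with the most similar question."""
    query_vector = encode(query)
    _, answer = max(qa_pairs, key=lambda pair: cosine(query_vector, encode(pair[0])))
    return answer
```

Given pairs such as `("muscle relax sleep", "A")`, `("tablet dose", "B")`, and `("mix combine", "C")`, a query containing "sleep" scores highest against the first stored question, so its paired answer is returned.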

The Robo interface was tested using three real scenarios. The responses to these scenarios were considered as the measure of how effective the chatbot was in its current form. The first user case was a question on whether muscle-relaxing medication helps sleep and relaxation; a factually applicable response was returned regarding two medications, including details on tablet dosage. The second user case asked if chewing opiates makes them work instantly; again, a matched response was given with, “No, but it sure does speed them up.” The final user case was a question on whether ketamine and opiates can be used together. The response here was a suggestion of using a different drug, GABA, to mix opiates with, so it was only in part accurate to the scenario, although it was pointed out that this was an accurate response to mixing and misusing drugs. There was no further testing, evaluation, or discussion on the efficacy of the Robo chatbot; however, the conclusion stated that as a first attempt at using big data as a core data source, not all data will have been assimilated or even asked. The stated future intention was to accumulate more data, in addition to weighting the priority of responses from medical experts.

The first of the studies by Prochaska et al. [43] took an existing chatbot, called Woebot, designed to deliver interventions based on the principles of cognitive behavioural therapy, and introduced customizations suited to SUD, such as motivational interviewing and cognitive behavioural therapy relapse prevention interventions. These interventions, known as W-SUDs, were developed as an 8-week programme, during which time the participants’ mood, cravings, and pain were tracked. A total of 101 participants, aged between 19 and 62 years (M = 36.8, SD = 10.0), took part in the study, after being positively screened using the Cut down, Annoyed, Guilty, Eye opener-Adapted to Include Drugs (CAGE-AID) Scale, for meeting the threshold for having problematic drug or alcohol use. Participants were also assessed as not having complex issues, such as drug-related medical problems, liver trouble, or a recent suicide attempt. Of the 101 participants, 51 completed both the pre- and post-study assessments, which consisted of the following measures: AUDIT-C, Drug Abuse Screening Test-10 (DAST-10), Generalized Anxiety Disorder-7 (GAD-7), Patient Health Questionnaire-8 (PHQ-8), and the Brief Situational Confidence Questionnaire (BSC) for assessing confidence to resist drug/alcohol use. Participant opinion of the W-SUDs was also measured, using the Usage Rating Profile-Intervention scale (URP-I), the Client Satisfaction Questionnaire (CSQ-8), and the Working Alliance Inventory-Short Revised (WAI-SR).

Paired sample t tests were used to compare the participants’ pre- and post-treatment scores. The overall confidence scores in all areas of the BSC significantly increased, showing improved confidence in resisting drug/alcohol use (all p values <0.05). Drug and alcohol use had reduced significantly in the AUDIT-C (t = −3.58, p < 0.01) and DAST-10 (t = −4.28, p < 0.01) measures. The PHQ-8 and GAD-7 also showed significant improvement with t = −2.91, p = 0.05 and t = −3.45, p = 0.01, respectively. A reduction in frequency and severity of cravings across the 8-week programme was indicated with a McNemar test (p < 0.01). Additional analysis showed that a greater decline in the AUDIT-C score was associated with reduced alcohol use, PHQ-8 depression, and GAD-7 anxiety, along with increases in BSC confidence. Similarly, greater decline in DAST-10 was associated with a reduction in PHQ-8 depression, but not with frequency of drug use or GAD-7 anxiety. It was also reported that participants currently in therapy showed only one statistically significant difference from those who were not, which was a greater reduction in depressive symptoms.
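For readers unfamiliar with the paired sample t test, the statistic is the mean of the within-participant differences divided by its standard error. The sketch below shows that calculation using only the Python standard library; the function name is illustrative and any scores passed to it here are hypothetical, not data from the study.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(pre: Sequence[float], post: Sequence[float]) -> Tuple[float, int]:
    """Return the paired-samples t statistic (post minus pre) and degrees of freedom."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, len(diffs) - 1
```

A negative t value, as reported for AUDIT-C and DAST-10, indicates that post-treatment scores were lower on average than pre-treatment scores.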

Satisfaction scores were generally high, for example, n = 35 reported that the W-SUDs had helped with their problems, n = 39 rated the quality of interaction highly, and n = 39 would recommend the W-SUDs. A lower number of participants (n = 21) rated the W-SUDs as having met most or all of their needs. The WAI-SR scores showed that participants rated bonding with Woebot higher than agreement on tasks and goals. The CSQ-8 scores did not differ by participant characteristic; however, non-Hispanic white participants scored higher on the WAI-SR and URP-I scales. A reduction in drug and alcohol use pre- and post-treatment was also associated with higher scores in the WAI-SR (r = −0.37, p = 0.008) and URP-I (r = −0.30, p = 0.03), along with a greater reduction in cravings. Confidence to resist drug and alcohol use was also associated with higher scores on the WAI-SR (r = 0.30, p = 0.03) and the URP-I (r = 0.33, p = 0.02).

The principal findings in the first study by Prochaska et al. [43] showed significant improvement between the pre- and post-treatment assessment. It was also noted that the significant reduction in depression and anxiety was consistent with previous findings on the use of Woebot, as well as showing a reduction in substance use. Participants who scored higher in the CAGE-AID were more likely to complete the post-treatment assessment. No other measures showed this, so the authors concluded that those in most need of help were more likely to use the W-SUDs and complete the programme. It was also observed that nearly 1,400 participants were excluded during the CAGE-AID screening process, due to low severity of alcohol and drug use, scoring less than the cut-off point of 2 for correctly identifying SUD with a specificity of 85% and sensitivity of 70%. This led the authors to suggest a need for early intervention in substance misuse. The participants were predominantly female (n = 76), employed (n = 73), and non-Hispanic white (n = 79). It was noted that future research should reflect a wider diversity. The first Prochaska report [43] stated that drug and alcohol use patterns and attitude to digital health interventions during the COVID-19 pandemic had not been considered within the study; however, the second W-SUD paper [42] was a follow-up study that introduced chatbot content for COVID-19 related stressors to see if using W-SUDs, specifically in a study period affected by the COVID-19 pandemic, reduced substance use.
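The screening rule can be expressed in a few lines. This is a minimal sketch, assuming the four CAGE-AID items are recorded as yes/no answers and that a score at or above the cut-off of 2 qualifies a participant; the function names are illustrative.

```python
from typing import Sequence


def cage_aid_score(answers: Sequence[bool]) -> int:
    """Count the 'yes' answers across the four CAGE-AID items."""
    if len(answers) != 4:
        raise ValueError("CAGE-AID has four yes/no items")
    return sum(1 for answer in answers if answer)


def meets_threshold(answers: Sequence[bool], cutoff: int = 2) -> bool:
    """True when the score reaches the cut-off used for screening."""
    return cage_aid_score(answers) >= cutoff
```

Because scores are whole numbers, a cut-off of 2 is equivalent to requiring a score above 1.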

This study by Prochaska et al. [42] was an RCT which adopted a similar approach to recruitment, screening participants for problematic drug and alcohol use. This resulted in 180 participants aged between 18 and 65 years (M = 40.8, SD = 12.1) scoring above 1 in their CAGE-AID assessment, the threshold for inclusion in the study. The participants were randomized to either the W-SUD intervention (n = 88) or a wait list (n = 92), where access to the intervention was postponed for the duration of the 8-week study. The pre- and post-assessment used a primary outcome measure of substance use occasions and secondary outcome measures using the same scales as the first study (AUDIT-C, DAST-10, GAD-7, BSC, PHQ-8), in addition to two new measures: the Short Inventory of Problems – Alcohol and Drugs, which extended the assessment to include problematic use within the last 30 days, and a measure to assess pandemic-related mental health effects. Due to a programming issue, DAST-10 was not used as an outcome measure post-assessment. As with the first study, participant opinion was also measured using CSQ-8, WAI-SR, and URP-I.

General linear models were used to test for differences in outcome between groups, with the W-SUD participants showing statistically significant reduced substance use compared to those on the wait list (p = 0.035), as well as a reduction in the estimated marginal mean for substance use occasions in the previous 30 days, −9.6 (SE = 2.3) compared to −3.9 (SE = 2.2). No statistical significance was found between groups for the secondary outcomes during the study period; although participants in the W-SUD group had a two-fold confidence gain on the BSC measure, this was not statistically significant (p = 0.175). As with the first study by Prochaska et al. [43], a reduction in substance use occasions saw a statistically significant increase in confidence and reduction in substance use problems as well as pandemic-related mental health problems (p < 0.05). User satisfaction was again found to be high, with the metrics increasing further when compared to the first study. This included acceptability across all measures. It was observed that the affective bond score on the WAI-SR measure was the highest in both studies, which was noteworthy as Woebot is a systemized agent. While the second study by Prochaska et al. [42] demonstrated there were no significant group differences resulting from the study period for the secondary outcomes, it did reinforce the effectiveness of W-SUD interventions in reducing substance use and associated substance use problems.

Addiction Support in Predominant Chatbots

Nobles and colleagues, in a short paper [39], explored how predominant chatbots respond to addiction help-seeking requests. The study considered the five chatbots that make up 99% of the intelligent virtual assistant market: Amazon Alexa, Apple Siri, Google Assistant, Microsoft Cortana, and Samsung Bixby. Prior to making the help-seeking requests, the software for each device running the chatbot was updated to the latest version (January 2019), and the language was set to English US. The location for all tests was San Diego, CA, USA. There were 14 different requests, which were repeated verbatim by two different authors. The authors were native English speakers. These measures were taken to mitigate problems shown in previous research with chatbot comprehension of medical terminology. The requests all started with “help me quit…” and concluded with the substance type, for example, alcohol, drugs, painkillers, or opioids. The responses were assessed against two criteria: (a) whether a singular response was given and (b) whether the singular response linked to an available treatment or treatment referral service.

When the five chatbots were asked “Help me quit drugs,” only Alexa returned a singular response, which was the definition for “drug.” The others failed to provide a useful response; for example, Google Assistant responded with “I don’t understand,” Bixby executed a web search, and Siri said “Was it something I said? I’ll go away if you say ‘goodbye.’” Similar results were given across all chatbots and substance types; for instance, Cortana replied with “I’m sorry. I couldn’t find that skill,” when queried for alcohol and opioids. An exception was Siri, which, when asked “Help me quit pot,” responded with details of a local marijuana retailer. In line with the assessment criteria, only two singular responses directed users to treatment or a treatment referral service. Here, Google Assistant linked to a mobile cessation application when asked about tobacco or smoking.

Of the total 70 help-seeking requests, only four resulted in singular responses. In the paper’s discussion, this is identified as a missed opportunity to promote referrals for addiction treatment and services, especially given the breadth and scope of what intelligent virtual assistants can accomplish. Three possible reasons for this were reported: that promoting health falls beyond the profit-driven objectives of technology companies; that the algorithms required to implement this type of health initiative exceed the expertise within technology companies; and that public health initiatives are not forming beneficial partnerships with technology companies. Furthermore, the report raised the ethical concern of responses being detrimental to public health, citing the example in which Siri directed the user to a marijuana retailer and explaining that, while this was partly due to location (tests were performed in San Diego, CA, USA), it was an example of potentially damaging advice being issued to someone trying to address an addiction.
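The proportions behind these figures can be checked with a short calculation. The following is a minimal sketch that uses only the counts quoted above from Nobles et al. [39] (five chatbots, 14 requests each, four singular responses, two of which linked to treatment); the variable and function names are illustrative and do not come from the original study.

```python
# Counts as summarized in this review of Nobles et al. [39].
CHATBOTS = [
    "Amazon Alexa",
    "Apple Siri",
    "Google Assistant",
    "Microsoft Cortana",
    "Samsung Bixby",
]
REQUESTS_PER_CHATBOT = 14        # distinct "help me quit..." requests
SINGULAR_RESPONSES = 4           # requests answered with a singular response
TREATMENT_LINKED_RESPONSES = 2   # singular responses linking to treatment or referral


def percentage(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


total_requests = len(CHATBOTS) * REQUESTS_PER_CHATBOT
singular_pct = percentage(SINGULAR_RESPONSES, total_requests)
treatment_pct = percentage(TREATMENT_LINKED_RESPONSES, total_requests)

print(f"Total requests: {total_requests}")
print(f"Singular responses: {singular_pct}% of requests")
print(f"Treatment-linked responses: {treatment_pct}% of requests")
```

On these counts, fewer than 6% of requests produced a singular response and fewer than 3% pointed the user toward treatment, which gives a sense of the scale of the missed opportunity described in the paper.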

Perspectives on Chatbots in Addiction

The paper by Barnett et al. [44] considers the perspectives of clients and counsellors regarding the technological and social effects of chatbots in alcohol and drug care. The theoretical approach employed in this research is based on affordances, which when originally applied to HCI suggest that designed-in features could signal how a technology can be used. It is reasoned by the authors that this HCI, involving human and non-human actors (user and chatbot), may combine differently as a technological experience, yielding opportunities (affordances) for what the technology may enable or constrain. By drawing on this, it can be postulated how chatbots might afford or constrain online drug and alcohol care. The research is further informed by the corpus of scholarship looking at “more than human” approaches to care, the conveyed motivation for which was to challenge traditional human-centric models for addiction treatment, which are uni-directional and subject to power asymmetries, and reframe them, looking at the dispersal of care through the everyday encounter of human and non-human actors, and how they collaborate with and shape one another.

There were 28 participants in the study, 20 clients (10 male and 10 female), aged between 22 and 78 years (M = 38), and 8 counsellors (5 male and 3 female), with a median of 2 years working for an online counselling service. Data were collected via a series of interviews and focus groups. The data were analysed using thematic analysis, where the themes were identified collaboratively and informed by the authors’ reading on affordances and “more than human” approaches. A strong theme emerged showing the participants’ concerns regarding the loss of empathy and mutual understanding from a non-human agent. It was questioned whether this type of perfunctory care was appropriate in drug and alcohol services, or whether it would undermine the goals of digitized health care in reaching a wider audience and removing barriers such as stigma. The participants were amenable to working in unison with a chatbot to complete more straightforward undertakings, such as collecting client histories or performing repetitive tasks such as triages. It was reported that some participants were concerned over chatbots impeding open and honest discourse, while others felt there were benefits in assuring confidentiality and privacy. The authors positioned this as an example of the affordances that chatbots offer HCI, where the interaction can emerge in different ways.

In recognizing the nuances and complexity of participant opinion, the authors proposed a “more than human” care model, one that distributes care provision between human and non-human actors. Their proposed model encompasses the continuing necessity of human input from trained professionals, such as counsellors, and enhances it with technological care agencies, such as chatbots. The intention is to offer quality care to a wider audience, an area that is reported in the study as being salient to drug and alcohol treatment, and one that justifies future research to maximize potential and minimize counterproductive outcomes in using digital health care as a contemporary mode of care.

The use of chatbots as supportive agents in the treatment of drug and alcohol addiction is an area of research in its infancy. Within this review, only six studies were identified as eligible for inclusion (see Fig. 1), despite the broad search term (“drug” OR “alcohol” OR “substance”) AND (“addiction” OR “dependence” OR “misuse” OR “disorder” OR “abuse” OR harm*) AND (“chatbot” OR “bot” OR “conversational agent”) AND “publication date” ≥ January 01, 2016 AND “publication date” ≤ March 27, 2022, and a rapidly growing corpus of work on the use of chatbots in healthcare. A similar verdict was drawn in the first study by Prochaska et al. [43] when it was noted that it was the opening study on a chatbot adapted for SUD.
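To make the logic of the search term concrete, the sketch below applies it to a single record. It is an illustration only: it assumes whole-word, case-insensitive matching on a record's text, treats harm* as a prefix wildcard, and uses hypothetical function names. The scholarly databases searched in this review will each apply their own matching rules (for example, stemming or field restrictions), so this is not a reproduction of their behaviour.

```python
import re
from datetime import date

# The three OR-groups of the search term, combined with AND.
SUBSTANCE_TERMS = ["drug", "alcohol", "substance"]
PROBLEM_TERMS = ["addiction", "dependence", "misuse", "disorder", "abuse"]
PROBLEM_PREFIXES = ["harm"]  # harm* in the search term: harm, harmful, harms, ...
AGENT_TERMS = ["chatbot", "bot", "conversational agent"]

# Publication date limits stated in the search term.
START_DATE = date(2016, 1, 1)
END_DATE = date(2022, 3, 27)


def any_term(text: str, terms: list[str], prefixes: tuple[str, ...] = ()) -> bool:
    """True if any whole-word term, or any word starting with a prefix, occurs in text."""
    patterns = [rf"\b{re.escape(term)}\b" for term in terms]
    patterns += [rf"\b{re.escape(prefix)}\w*" for prefix in prefixes]
    return any(re.search(pattern, text, re.IGNORECASE) for pattern in patterns)


def matches_search(text: str, published: date) -> bool:
    """Apply the three AND-ed groups and the date range to one record (assumed logic)."""
    return (
        any_term(text, SUBSTANCE_TERMS)
        and any_term(text, PROBLEM_TERMS, tuple(PROBLEM_PREFIXES))
        and any_term(text, AGENT_TERMS)
        and START_DATE <= published <= END_DATE
    )


# Hypothetical records, not taken from the reviewed corpus.
print(matches_search("A chatbot intervention for harmful alcohol use", date(2021, 5, 1)))
print(matches_search("A chatbot for anxiety and depression", date(2020, 1, 1)))
```

A record has to satisfy all three groups and fall inside the date window to be returned, which is why a broad-looking query can still yield a small set of papers for screening.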

The two studies by Prochaska et al. [42, 43] reported a reduction in substance use in the participants who engaged with Woebot and completed the W-SUDs, a reduction that was quantitatively corroborated with several well-established and reliable measures, such as the GAD-7, AUDIT-C, and PHQ-8. Relatedly, through qualitative assessment, the paper by Barnett et al. [44] found people receptive to working with chatbots in the field of drug and alcohol addiction, recognizing the benefits this affords, such as confidentiality and privacy. This receptiveness was, however, tempered by concern over losing human input, empathy, and understanding, and over constraints on open and honest communication.

Discussion

Principal Findings

The biggest obstacle to extending the use of chatbots in this area of healthcare, as reported in the papers reviewed, concerned the ethical implications of using a non-human agent in a supportive role. The paper by Barnett et al. [44] discussed the forfeiture of empathy and understanding in HCI. Research by Nobles et al. [39] showed how AI can be harmful without human reasoning, when Siri was asked for help quitting marijuana and directed the user to a place where they could purchase it. Similarly, work by Moghadasi et al. [41] ran user cases that gave responses advocating taking drugs in a certain way or mixing drugs for better effect. Both these studies [39, 41] use data that have not been assessed or validated as suitable for use in the treatment of drug and alcohol addiction. This lends credibility to the findings in the papers concerned with bespoke chatbots developed specifically for addiction and SUD, as opposed to those which leverage existing resources, such as big data or the predominant chatbot platforms. Furthermore, in addition to the reduction in substance use reported in the papers by Prochaska et al. [42, 43], the studies by Elmasri et al. [40] and Prochaska et al. [43] showed positive results when evaluating user feedback on engaging with chatbots specifically designed for SUD.

The potential for causing harm to an already vulnerable population presents a barrier to the future acceptance of chatbots within addiction services. This point of concern was highlighted in two of the reviewed papers [39, 41], both of which gave examples of a potentially damaging response having been sent to the end user. The need to monitor the safe usage of chatbots such as those reviewed is paramount given the rapid development lifecycle of such solutions and the complex and sometimes unpredictable nature of a target population with a history of problem drug and alcohol use. Compounding this is the lack of research conducted to date. Of the papers reviewed, only two [42, 43] had a longitudinal component, with the later paper [42] validating the findings in a follow-up study on the use of W-SUDs. Chatbot implementations that have undergone a more robust validation process to establish a reliable and expert-informed evidence base are necessary for confidence in this type of digital intervention. With this confidence, addiction services can better afford support using chatbots to those they engage with, whether in a clinical capacity, as aftercare support, or as a remote treatment option.

Limitations and Future Research

The scope of this study included drug and alcohol addiction. Papers that covered other addictions, such as smoking and gambling, were not taken forward for review, so the opportunity to learn from advances made in these areas has not been realized. Furthermore, in the papers reviewed, there was a partiality toward studying the feasibility and acceptability of chatbots as opposed to empirical study of their use, with the paper by Barnett et al. [44] looking at the affordances of chatbots, the paper by Elmasri et al. [40] having a strong emphasis on user acceptance, and the papers by Moghadasi et al. [41] and Nobles et al. [39] assessing outcomes based on user cases.

None of the studies considered whether chatbots were more effective than alternate digital interventions, for example, systemized psychoeducation or the provision of online advice. It was also not possible to gauge improvement based on chatbot intervention as opposed to no intervention for the qualitative and mixed methods studies. Furthermore, none of the papers used an active control group or clinically diagnosed participants, and the only RCT employed a waiting list control. Given these methodological limitations, along with the absence of longitudinal observation from baseline, the causal effect of chatbots on SUD is not determinable within the existing corpus of work. This exposes a gap in the current literature and an area for more transparent and in-depth study looking at the longer-term outcomes of using chatbots as an intervention for people with SUD, using a more selective recruitment strategy and expansive design.

This review has highlighted that more work is required if chatbots are to safely leverage data that exist in the public domain. It has also shown that future chatbot solutions need input from those with an appropriate level of expertise in the subject area to ethically ensure their suitability for their target audience. The papers aimed at developing bespoke chatbots designed specifically for use in this area of healthcare did report some favourable results, and while the limitations discussed suggest the current literature base is not sufficient to direct future decisions on effective chatbot design, it does clarify necessary components for consideration in future work in this area.

Conclusion

This review sought to investigate the use of chatbots targeted at supporting people with an SUD. In doing so, it found the body of research in this field is limited, and given the quality of the papers reviewed, it is suggested more research is needed to report on the usefulness of chatbots in this area with greater confidence. Two of the papers reported a reduction in substance use in those who participated in the study. While this is a favourable finding in support of using chatbots in this field, a strong message of caution must be conveyed insofar as expert input is needed to safely leverage existing data, such as big data from social media or that which is accessed by prevalent market-leading chatbots. Without this, serious failings like those highlighted within this review mean chatbots can do more harm than good to their intended audience.

Statement of Ethics

An ethics statement is not applicable because this study is based exclusively on published literature.

Conflict of Interest Statement

Lisa Ogilvie, Julie Prescott, and Jerome Carson declare that they have no conflicts of interest.

Funding Sources

The authors did not receive any funding in relation to this study.

Author Contributions

Lisa Ogilvie: substantial contributions to the conception and design of the work and the acquisition, analysis, and interpretation of data. Julie Prescott and Jerome Carson: contributions to the design of the work; the acquisition, analysis, and interpretation of data; and agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Data Availability Statement

The data that support the findings of this study are available by a named search of the data sources listed in Table 1. Further enquiries can be directed to the corresponding author.