Abstract

Every year, millions of patients worldwide undergo cognitive testing. Unfortunately, new barriers to the use of free open access cognitive screening tools have arisen over time, making accessibility of tools unstable. This article follows up on an editorial discussing alternative cognitive screening tools for those who cannot afford the costs of the Mini-Mental State Examination and Montreal Cognitive Assessment (see www.dementiascreen.ca). The current article outlines an emerging disruptive "free-to-fee" cycle in which free open access cognitive screening tools are integrated into clinical practice and guidelines, fees are then levied for the use of the tools, and clinicians move on to other tools. This article provides recommendations on means to break this cycle, including the development of tool kits of valid cognitive screening tools that authors have contracted not to charge for (i.e., have agreed to keep free open access). The PRACTICAL.1 Criteria (PRACTIcing Clinician Accessibility and Logistical Criteria Version 1) are introduced to help clinicians select from validated cognitive screening tools, considering barriers and facilitators, such as whether the cognitive screening tools are easy to score and free of cost. It is suggested that future systematic reviews embed the PRACTICAL.1 criteria, or refined future versions, as part of the standard of review. Methodological issues, the need for open access training to ensure proper use of cognitive screening tools, and the need to anticipate growing ethnolinguistic diversity by developing tools that are less sensitive to educational, cultural, and linguistic bias are discussed in this opinion piece. J Am Geriatr Soc 68:2207-2213, 2020.

1 BACKGROUND

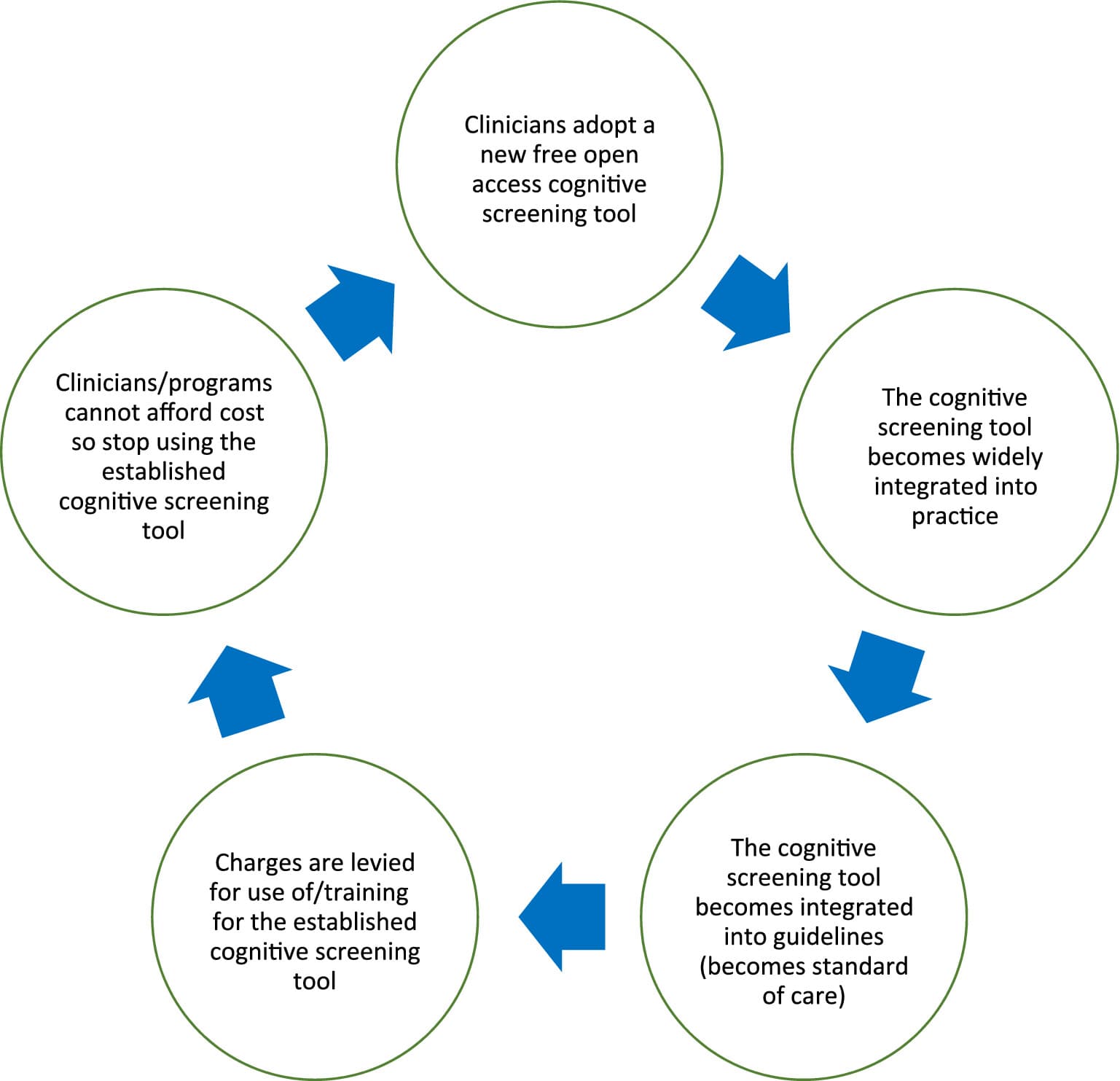

Every year, millions of patients worldwide undergo cognitive testing for a variety of reasons—one of the most common being to detect signs of dementia, which may impact on function and safety. Unfortunately, the strengths and weaknesses of the cognitive screening tools available to clinicians are often not well understood by some of the clinicians who apply them. Furthermore, new barriers to the use of free open access cognitive screening tools have arisen over time, making the choice and accessibility of tools unstable. In 2001, the intellectual property rights for the Mini-Mental State Examination (MMSE) were transferred to Psychological Assessment Resources (PAR Inc). When costs for use of the MMSE were levied, many healthcare practitioners switched to the Montreal Cognitive Assessment (MoCA), which then became the standard of care. In 2019, one of the developers of the MoCA announced plans to charge for access and training as of September 2020 (note: not all the developers of the MoCA are involved in this request). Many clinicians may stop using the MoCA, as they did the MMSE. We seem to be entering a “free-to-fee” cycle of instability, where free open access tests are integrated into practice and guidelines, and after widespread uptake, new fees are levied and then clinicians switch to other tests (see “free-to-fee” cycle in Figure 1). This article follows up on a prior editorial (www.dementiascreen.ca) by reviewing relevant methodological issues and the resultant tactics and investments required to break this cycle. This article is not a systematic review but rather an opinion piece that represents the culmination of 20 years of study, discussions with colleagues, and observations of the application of cognitive screening tools in clinical practice.

Figure 1. Emerging “Free-to-Fee” cycle to be avoided in the future.

1.1 Screening Tools Are Distinct from Diagnostic Tools

Screening answers the question “is a potential problem present?” Diagnosis answers the question “what is the cause/etiology of the problem if it is present?” These are two different questions.

The result of a screening test alone is insufficient to make the diagnosis of dementia and, conversely, one can make a diagnosis of dementia without a screening test, based on history and clinical examination. This article will focus on screening tools.

1.2 One Size Does Not Fit All: Different Types of Screening Tools May Be Suited to Different Circumstances (e.g., Different Settings and Different Patients)

Authors have described several approaches to screening:

Cognitive tests administered to patients (e.g., MMSE and MoCA),

Questions regarding cognition and function asked of proxy informants (e.g., Informant Questionnaire on Cognitive Decline in the Elderly (IQCODE) and AD8),

Functional assessments that use direct observation of test tasks, which are more common in specialty settings where occupational therapy is available,

Screening by a self-administered questionnaire,

Clinician observations of behavior suggesting cognitive impairment.

Each of these approaches has a complementary role.

Cognitive tests administered to patients are useful “since many older patients do not arrive at the doctor's office with someone who has known them well for a long time and is willing to discuss the subject's failing abilities (which may be against cultural or personal norms).”

Proxy informant-based questions provide longitudinal information (change over time) and may be less sensitive to education or culture if following patients from their own functional baseline. They require reliable and accurate informants.

Both proxy informant-based questions and functional assessments may be useful when cognitive tests administered to patients are inaccurate due to ethnolinguistic diversity, which is growing rapidly in many countries.

Theoretically, both proxy informant-based questions and screening by a self-administered questionnaire may be started in the waiting room, thereby saving clinician time.

Clinician observations of behavior suggesting cognitive impairment take little to no time and may trigger some of the previously mentioned screens.

1.3 Myth Busting: Psychometric Properties (e.g., Sensitivity, Specificity, Positive Predictive Value, and Negative Predictive Value) of Cognitive Screening Tools Are Not as Stable or Predictable as We Like to Believe

Factors that alter psychometric properties of cognitive screening tools were outlined in www.dementiascreen.ca and are described in greater detail in Table 1.

Table 1. Factors that Alter Psychometric Properties (e.g., Sensitivity, Specificity, PPV, and NPV) of Cognitive Screening Tools (Based on www.dementiascreen.ca)

| No. | Factor |
| --- | --- |
| 1 | Spectrum of disease (type(s) and severity of dementia in population studied). |
| 2 | Prevalence of disease (likely lower prevalence in primary care than in geriatric clinics, memory clinics, and geriatric day hospitals). This means psychometric properties (e.g., sensitivity, specificity, PPV, and NPV) may be significantly different in such specialty clinics relative to values in primary care settings. |
| 3 | Setting (e.g., community vs primary care vs emergency department vs in hospital vs long-term care vs specialty clinic). |
| 4 | Cutoff (cutpoint) adopted: for tests where 0 indicates severe impairment and higher scores reflect better cognition (e.g., MMSE and MoCA), as one raises the cutoff, the sensitivity increases (more people with disease fall below the cutoff and are detected) but the specificity decreases (more people free of disease also fall below the cutoff and are falsely labeled as impaired). There is a trade-off of sensitivity vs specificity; as one increases, the other tends to decrease. |
| 5 | What one is screening for: dementia, MCI, or cognitive impairment in general (due to dementia, MCI, delirium, and/or depression). |
| 6 | Language: cognitive tests administered to patients and questionnaires applied to proxy informants represent language-based testing that must be validated independently in each language (i.e., it is not valid to merely translate and retain the same cutoff scores as some test developers have done). |
| 7 | Culture: screening tools are often developed in specific cultures and may not work as well in cultures they have not been developed and validated in. |
| 8 | Education: the impact of education on cutoff scores is well known. Some screening tools provide compensatory scoring approaches. |
| 9 | Targeting: whether one applies the screen to all comers (the traditional definition of population screening) or only to higher-risk individuals (e.g., targeting those with advanced age (>75- and >85-year cutoffs have been cited), vascular risk factors, late-onset depression, family history, and subjective memory complaints). |

Abbreviations: MCI, mild cognitive impairment; MMSE, Mini-Mental State Examination; MoCA, Montreal Cognitive Assessment; NPV, negative predictive value; PPV, positive predictive value.
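The cutoff trade-off described in point 4 of Table 1 can be illustrated with a minimal sketch. The scores below are invented purely for illustration (they are not data from any study), and the function name `sensitivity_specificity` is our own; the sketch assumes a 0 to 30 test on which higher scores reflect better cognition and a score below the cutoff counts as a positive screen.

```python
# Hypothetical scores on a 0-30 test where higher scores reflect better cognition.
# These values are invented for illustration only; they are not study data.
impaired_scores = [14, 17, 19, 21, 22, 23, 24, 25, 26, 27]
unimpaired_scores = [22, 24, 25, 26, 27, 27, 28, 29, 29, 30]


def sensitivity_specificity(cutoff, impaired, unimpaired):
    """Return (sensitivity, specificity) when a score below `cutoff` is a positive screen."""
    true_positives = sum(score < cutoff for score in impaired)
    true_negatives = sum(score >= cutoff for score in unimpaired)
    return true_positives / len(impaired), true_negatives / len(unimpaired)


# Raising the cutoff detects more impaired people but mislabels more unimpaired people.
for cutoff in (22, 24, 26, 28):
    sens, spec = sensitivity_specificity(cutoff, impaired_scores, unimpaired_scores)
    print(f"positive if score < {cutoff}: sensitivity={sens:.2f}, specificity={spec:.2f}")
```

With these made-up scores, each increase in the cutoff raises sensitivity and lowers specificity, which is the pattern point 4 describes; the actual values in any real population would differ.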

Point 9 in Table 1 regarding who to screen (i.e., targeting) can create even more variability and unpredictability in the psychometrics of cognitive screening tools.

Rather than screening all comers, several experts have suggested screening based on risk stratification. Brodaty and Yokomizo and colleagues suggested screening those aged 75 years and older. Ismail et al expanded on this by suggesting that screening be based on both age and comorbidities associated with dementia.

Employing the above risk stratification will alter psychometrics of cognitive screening tools—sensitivity and specificity will not be the same in a practice employing these risk stratification criteria as in studies that did not employ the same risk stratification.

The bottom line is that sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV) can vary widely from study to study (making studies hard if not impossible to compare) and from clinical setting to clinical setting (meaning test accuracy in one's own clinical setting may be different from study psychometrics). These psychometric values can vary even more as clinicians employ different targeting criteria regarding who they will and will not screen if the same targeting criteria were not used in the studies.

Sensitivity, specificity, PPV, and NPV are not as stable or fixed as we like to believe. Rather, they can vary widely and are dependent on the factors outlined in Table 1. At most, one can say that a cognitive screening test is reasonable to use if it has demonstrated acceptable sensitivity and specificity in a setting similar to the one in which one is practicing with similar patients and similar targeting criteria.
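The dependence of PPV and NPV on prevalence follows directly from their definitions, and a short sketch may make this concrete. The sensitivity, specificity, and prevalence figures below are hypothetical values chosen for illustration, not estimates for any particular tool or setting, and `predictive_values` is our own helper name. Holding sensitivity and specificity fixed is itself a simplification, since these may also shift with the factors in Table 1.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) from sensitivity, specificity, and prevalence (all as proportions)."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Hypothetical test characteristics and prevalences, for illustration only.
SENSITIVITY = 0.85
SPECIFICITY = 0.85
for setting, prevalence in (("lower-prevalence setting", 0.05),
                            ("higher-prevalence setting", 0.50)):
    ppv, npv = predictive_values(SENSITIVITY, SPECIFICITY, prevalence)
    print(f"{setting} (prevalence {prevalence:.0%}): PPV={ppv:.2f}, NPV={npv:.2f}")
```

Under these assumed values, the same test yields a much lower PPV where the condition is uncommon than where it is common, which is why predictive values reported from a specialty clinic may not carry over to a primary care practice.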

Selection of cognitive screening tests for clinical practice therefore cannot be based solely on potentially unstable psychometrics (e.g., sensitivity and specificity). Clinicians require additional criteria to help them select the most appropriate cognitive screening tools for their practice, as described below.

1.4 The PRACTICAL.1 Criteria to Help Clinicians Make Informed Practical Selections

This article follows up on a prior editorial with the PRACTICAL.1 criteria (PRACTIcing Clinician Accessibility and Logistical Criteria Version 1), which represent selected, modernized versions of some of Milne's Practicality, Feasibility, and Range of Applicability criteria.

The PRACTICAL.1 criteria include 7 filters:

Filter 1: Remove tests not validated in multiple studies for recommended setting.

Filter 2: Remove tests that charge for use, training, and/or resources to apply the test.

Filter 3: Remove tests with significant challenges in scoring.

Filter 4: Remove tests without short-term memory testing.

Filter 5: Remove tests without executive function.

Filter 6: Remove tests that take longer than 10 minutes.

Filter 7: Remove tests taking longer than 5 minutes.

1.5 Illustrating How to Apply the PRACTICAL.1 Criteria to Select Tools that Are Most Feasible in Frontline Clinical Practice

The U.S. Preventive Services Task Force (USPSTF) systematic review focused on cognitive screening tools validated in at least two primary care settings. This review selected 16 cognitive tests: very brief (≤5 minutes), brief (6–10 minutes), and longer (>10 minutes) cognitive screening tools. Very brief tests included six tests administered to patients (e.g., the Clock Drawing Test (CDT), Lawton Instrumental Activities of Daily Living (IADL) Scale, Memory Impairment Screen (MIS), Short-Portable Mental Status Questionnaire and Mental Status Questionnaire (SPMSQ/MSQ), Mini-Cog, and Verbal Fluency) and two proxy informant-based tests (e.g., AD8 Dementia Screening Interview and Functional Activities Questionnaire (FAQ)). Brief tests included six additional tests administered to patients (e.g., MMSE, 7-Minute Screen (7MS), Abbreviated Mental Test (AMT), MoCA, the St. Louis University Mental Status Examination (SLUMS), and the Telephone Interview for Cognitive Status (TICS/modified TICS)). Long tests included the IQCODE (26-item IQCODE and the 12-item IQCODE-short).

Starting with a list of 16 USPSTF options meeting filter 1 (validation in multiple studies for recommended setting), if we apply filter 2, removing tests that charge for use, training, and/or resources to apply the test (e.g., MMSE, MoCA, AMT, and TICS), we are left with 12 options: CDT, 7MS, SPMSQ/MSQ, Verbal Fluency, MIS, 26-item IQCODE and the 12-item IQCODE-short, SLUMS, Mini-Cog, Lawton IADL, AD8, and FAQ.

If we then apply filter 3, removing tests that have significant challenges in scoring (e.g., CDT, 7MS, SPMSQ, and MSQ), the list decreases to nine options: Verbal Fluency, MIS, 26-item IQCODE and the 12-item IQCODE-short, SLUMS, Mini-Cog, Lawton IADL, AD8, and FAQ.

Loss of short-term memory is the presenting feature of Alzheimer's dementia. Many clinicians would likely feel screening tests should therefore directly test short-term memory. Applying filter 4, by removing tests without short-term memory testing (Verbal Fluency), reduces the list to eight options: MIS, 26-item IQCODE and the 12-item IQCODE-short, SLUMS, Mini-Cog, Lawton IADL, AD8, and FAQ.

Some clinicians may feel screening tests should test executive function to detect loss of ability to manage medications, driving risk, as well as to detect dementias with frontal lobe dysfunction (e.g., frontotemporal dementia, Lewy body dementia, and Parkinson's dementia). Applying filter 5, by removing tests without executive function testing (MIS), further reduces the list to seven options: 26-item IQCODE and the 12-item IQCODE-short, SLUMS, Mini-Cog, Lawton IADL, AD8, and FAQ.

Time pressure in primary care is often more demanding than in specialty clinics. If we apply filter 6, by removing tests that take longer than 10 minutes, such as the 26-item IQCODE and the 12-item IQCODE-short, then we are left with five options: SLUMS, Mini-Cog, Lawton IADL, AD8, and FAQ.

Some clinicians may desire very brief tests. Applying filter 7, by removing tests taking longer than 5 minutes, removes the SLUMS, thereby reducing the list to four options: Mini-Cog, Lawton IADL, AD8, and FAQ.

How (i.e., the sequence of filters to employ) and how much (i.e., how many filters to employ) one wishes to shrink the field of candidate tools depends on the above decisions—the PRACTICAL.1 criteria empower clinicians to make these filtering decisions when selecting cognitive screening tools for their clinical practice.
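The walk-through above can be sketched as a simple sequential filter. The candidate list and the tests removed at each step are transcribed from the text; the data layout and the function name `apply_filters` are illustrative assumptions of ours rather than part of the PRACTICAL.1 criteria themselves. Filter 1 is omitted because all 16 USPSTF options already meet it.

```python
# The 16 USPSTF options described above (all meet filter 1).
candidates = [
    "CDT", "Lawton IADL", "MIS", "SPMSQ/MSQ", "Mini-Cog", "Verbal Fluency",
    "AD8", "FAQ", "MMSE", "7MS", "AMT", "MoCA", "SLUMS", "TICS",
    "IQCODE (26-item)", "IQCODE-short (12-item)",
]

# Tests removed at each filter, as listed in the walk-through above.
filters = [
    ("Filter 2: charges for use, training, or resources", {"MMSE", "MoCA", "AMT", "TICS"}),
    ("Filter 3: significant challenges in scoring", {"CDT", "7MS", "SPMSQ/MSQ"}),
    ("Filter 4: no short-term memory testing", {"Verbal Fluency"}),
    ("Filter 5: no executive function testing", {"MIS"}),
    ("Filter 6: longer than 10 minutes", {"IQCODE (26-item)", "IQCODE-short (12-item)"}),
    ("Filter 7: longer than 5 minutes", {"SLUMS"}),
]


def apply_filters(tools, filters_to_apply):
    """Apply filters in order; return the list of tools remaining after each step."""
    remaining = list(tools)
    history = []
    for label, removed in filters_to_apply:
        remaining = [tool for tool in remaining if tool not in removed]
        history.append((label, list(remaining)))
    return history


history = apply_filters(candidates, filters)
for label, remaining in history:
    print(f"{label}: {len(remaining)} left -> {', '.join(remaining)}")
```

A clinician who does not need very brief tests could stop early, for example by passing `filters[:5]`, which would retain the SLUMS alongside the Mini-Cog, Lawton IADL, AD8, and FAQ.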

2 DISCUSSION

In addition to the 16 tests selected by the USPSTF, others have recommended the Modified Mini-Mental State Examination (3MS), a four-item version of the MoCA, and the Rowland Universal Dementia Assessment Scale (RUDAS), all of which are addressed below.

The 3MS is a 100-point scale that is likely too long for primary care practice. The Ottawa 3DY (O3DY) is a brief cognitive screening tool, derived from the 3MS, composed of four questions that do not require equipment, paper, or pencil (O3DY is purely verbal). The 3DY questions are Day of the week, Date, DLROW (WORLD spelled backwards), and Year. The psychometric properties have been reported in emergency department studies. The O3DY has been validated in French. Rather than studying the long 3MS in primary care settings, perhaps its shorter derivative, the O3DY, may be more suited to primary care validation research and, if found to be valid, primary care practice.

The four-item version of the MoCA has just completed the derivation stage. To our knowledge, it has not yet been validated in multiple primary care settings. It is premature to recommend this test for widespread use in primary care. Given the upcoming cost to use the MoCA, it is unclear if a charge will be attached to the use of shorter versions of the MoCA.

As points 6 to 8 in Table 1 demonstrate, language, education, and culture can have major impacts on the psychometric properties of cognitive screening tools. As the number of seniors with limited English proficiency continues to grow, we will increasingly need cognitive screening tests that are less affected by education, language, and culture. Ismail et al identified the RUDAS as being “relatively unaffected by gender, years of education, and language of preference.” Although this screening test is lengthy, it may prove helpful in select situations in primary care settings or in specialty settings where more time and resources are available.

3 CONCLUSION

In preparing this article, the authors were repeatedly asked “what single test should all clinicians use … what is the best test?” These questions are revealing. Perhaps we have been unwisely overreliant on one test. Searching for the holy grail of the one best test may be folly. The reality is there are different complementary approaches to screening that work in different situations and for different patients, as described above—one size does not fit all.

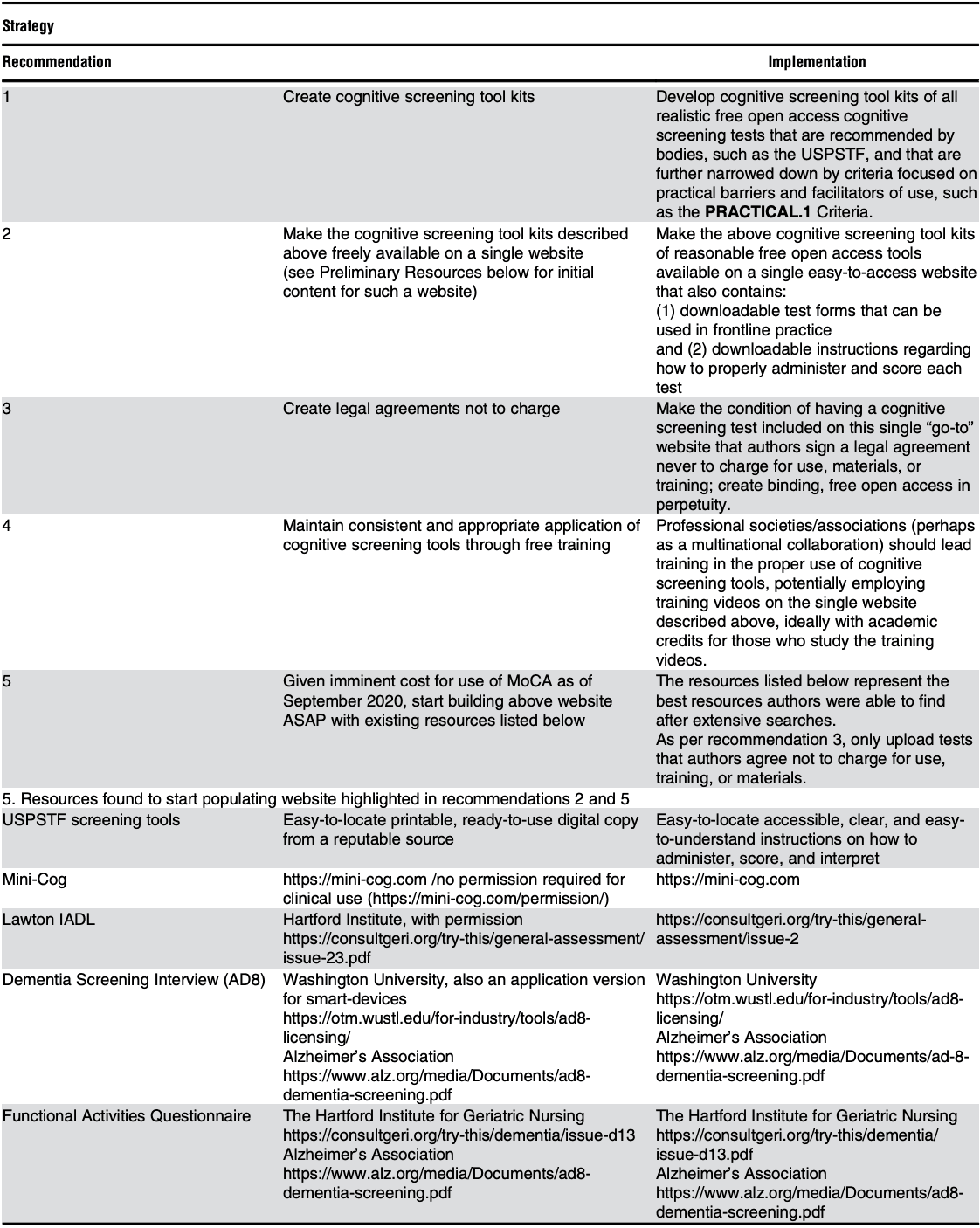

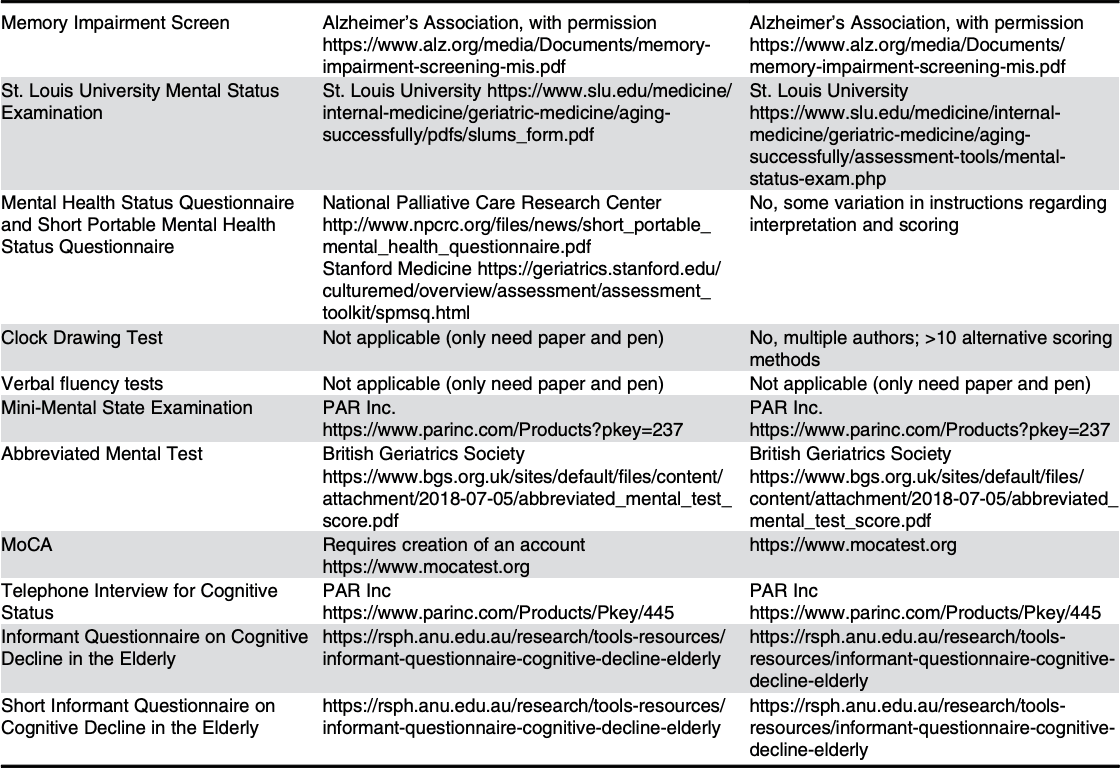

Research alone will not break the “free-to-fee” cycle depicted in Figure 1. To facilitate change, we recommend the steps outlined in Table 2.

Table 2. Steps Required to Break the “Free-to-Fee” Cycle Depicted in Figure 1

Abbreviations: ASAP, as soon as possible; IADL, Instrumental Activities of Daily Living; MoCA, Montreal Cognitive Assessment; PRACTICAL.1, PRACTIcing Clinician Accessibility and Logistical Criteria Version 1; USPSTF, U.S. Preventive Services Task Force.

To further empower readers, Table 2 includes links to the above-mentioned screening tools so that readers can familiarize themselves with the tools before making their selections.

In addition to providing guidance to clinicians, we are also hopeful this article will serve as a call to action for our national professional organizations as well as granting agencies funding the development and validation of cognitive screening tools.