Abstract

In 2012, the United States Supreme Court struck down existing legislative statutes mandating life without parole sentencing of convicted homicide offenders under age 18. The Court’s core rationale credited research on brain development that concludes that juveniles are biologically less capable of complex decision-making and impulse control, driven by external influences, and more likely to change. Closer scrutiny of the research cited in the defendants’ amicus brief, however, reveals it to be inherently flawed because it did not include relevant populations, such as violent offenders; utilized hypothetical scenarios or games to approximate decision-making; ignored research on recidivism risk; made untenable leaps in its interpretation of relevance to the study of homicide; and failed to include contradictory evidence, even from the brief’s authors. In forensic assessment, a blanket assumption of immaturity based on a homicide offender’s age is not appropriate, as research has demonstrated that in relevant respects, older adolescents can be just as mature as adults. An individualized and thorough assessment of each juvenile offender, including an analysis of personal history, behavioral evidence such as pre-, during-, and post-crime behavior, and testing data, more accurately informs questions of immaturity and prognosis in juvenile violent offenders.

Introduction

In Miller v. Alabama, 567 U.S. 460 (2012), the United States Supreme Court struck down existing legislative statutes mandating life without parole (LWOP) sentences for convicted murderers under 18. The Court opinion cited a range of influences for its decision that “juveniles have diminished culpability and greater prospects for reform” (Miller, 2012, p. 8). The Miller Court principally referenced the Court’s previous decisions in Roper v. Simmons, 543 U.S. 551 (2005), in which it outlawed the death penalty for convicted murderers under 18, and Graham v. Florida, 560 U.S. 48 (2010), in which it disallowed LWOP for non-homicide juvenile offenders. The core rationale, originating in Roper and extending through Miller, emphasized that:

Juveniles are less capable of mature judgment.

Juveniles are more vulnerable to negative external influences.

Juveniles have an increased capacity for reform and change.

Juveniles have psychosocial immaturity that is consistent with research on adolescent brain development.

Sources of influence on the Miller Court’s decision also included a brief of amici curiae submitted jointly by the American Psychological Association (APA), the American Psychiatric Association, and the National Association of Social Workers. In the previous Roper and Graham proceedings before the Court, the APA also submitted amici curiae briefs in support of the petitioners, with contribution from a number of the same authors as Miller. Unlike its decision in Roper relating to capital punishment, in Miller, the Court did not impose a categorical ban on sentences of LWOP for people under 18. Rather, the Court protected a given defendant’s recourse to have the judge consider the potential significance of their youth and attendant characteristics before imposing a penalty. However, following the Miller decision, numerous states categorically banned LWOP sentencing for juvenile offenders, even in cases of homicide. At the time of this review, 23 states including the District of Columbia have banned LWOP sentencing for juveniles, many citing the Miller decision in their rationale (The Campaign for the Fair Sentencing of Youth, 2020). Virginia is the most recent state to retroactively ban LWOP sentencing for juveniles, with the new law providing parole review for youth offenders after 20 years (Virginia House Bill No. 35, 2020).

The Miller amicus brief did not introduce any research to demonstrate the limitations of those above age 18. It argued, essentially, that special sentencing considerations are warranted for those less than 18 years old. However, after Miller, some have argued that sentence relief should be applied to defendants into their 20s (Tutro, 2014). The amicus briefs in support of Roper, Graham, and Miller cited numerous research studies that are inherently flawed in their methodology, scientific rigor, and applicability to homicide committed by those 18 and under.

Scientific understandings relevant to criminal maturity engage distinct expertise from diverse specialties. This includes what is known about how people change as they age, why these changes take place, the role of neurobiology and other developmental and social factors in violence and criminal deviance, and what signifies maturity as it relates to violent crime and, in particular, homicide. This paper provides a review and analysis of the salient scientific research, data, historical evidence, and advances in the various specialties informing violent criminal maturity among juveniles. Through this review, this paper examines each of the four main arguments that were the basis for the Miller decision, identifies methodological flaws in the cited research, and provides updated evidence and research where applicable. The paper concludes with a recommendation for appropriate forensic assessment of juvenile homicide offenders to inform sentencing decisions.

The Miller Brief

Mature Judgment in Juveniles

In the Miller amicus brief, the authors argued that juveniles are less capable of mature judgment, which leads to involvement in more risky and reckless behaviors, including criminal behavior. Support for these conclusions includes the age-crime curve and studies examining antisocial behavior across the lifespan. The amicus brief authors further asserted that research shows juveniles are less capable of self-regulation (i.e., less able to resist their impulses), respond differently to perceptions of risk and reward, and are unable to weigh the consequences of their behavior. However, the studies relied upon in the Court’s decisions in Miller (and Graham and Roper before it) are inherently flawed. Many studies relied on self-reported measures, utilized convenience samples of college students, administered edited versions of instruments that were not psychometrically tested and verified, relied on measures of risk-taking behavior based on hypothetical situations, and are not generalizable to homicide or the study of homicide offenders because they lack ecological validity.

The Age-Crime Curve

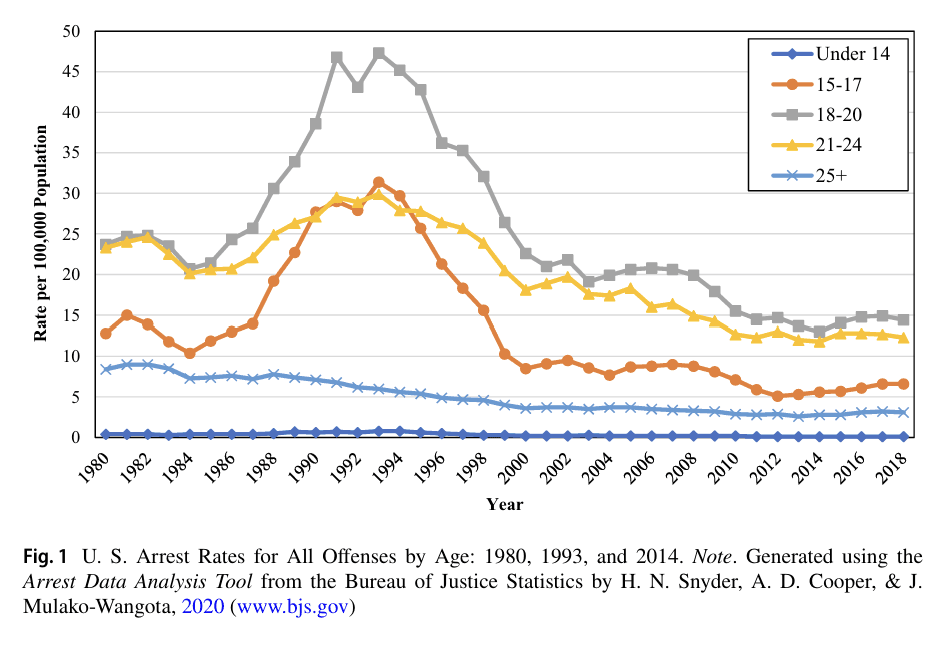

In Roper and again in Miller, the Court indicated that juveniles were overrepresented in almost every category of reckless behavior. In 1992, Arnett provided evidence that juveniles were more likely to engage in four types of reckless behavior including speeding while driving under the influence, using illegal drugs, having unprotected sex, and engaging in minor criminal activity such as theft and vandalism. These behaviors, while potentially indicative of antisocial conduct, do not remotely approach the severity of homicide. Arnett also included the early 20s in his definition of adolescence, which does not parallel the age group of interest in Miller. In her seminal developmental taxonomy, Moffitt (1993, citing Blumstein et al., 1988) provided index arrest rate data from 1980 showing that the prevalence and incidence of offending appeared highest in adolescence, peaking at age 17. The relationship between age and crime has been referred to as the “age-crime curve,” which visually resembles a positively skewed distribution, with crimes gradually decreasing with increasing age. In its argument that juveniles are less mature, the Miller brief indicated the age-crime curve was the most salient finding across studies.

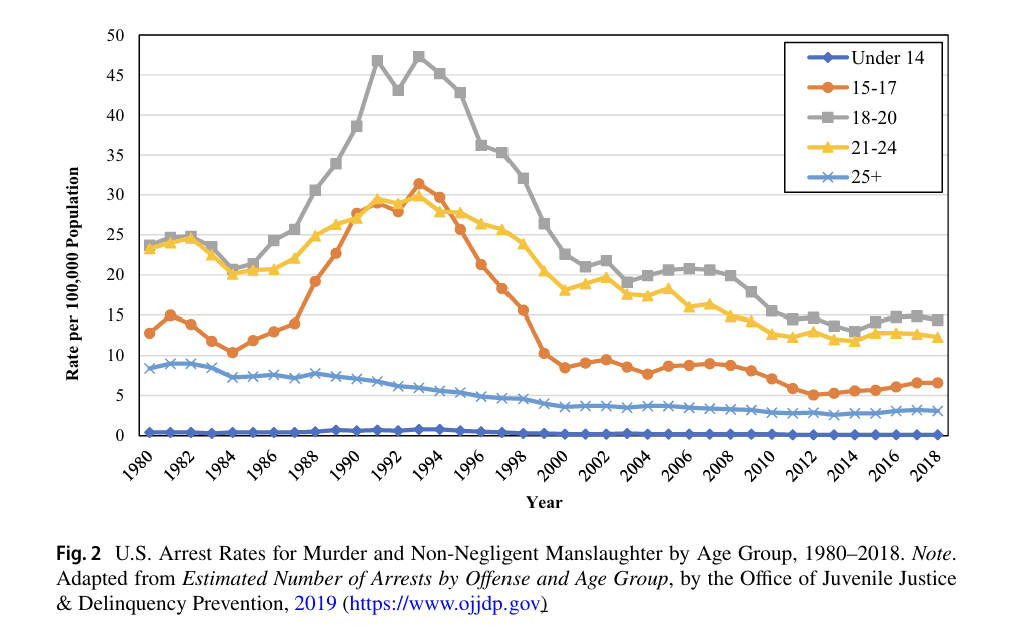

Analysis of data from the Bureau of Justice Statistics and the National Center for Juvenile Justice, however, does not support this assertion. Age-arrest curves from 1980, 1993, and 2014 (Fig. 1) show that although there is an increase in rates over the adolescent years, the peak occurs at age 18 or 19 (Snyder et al., 2020). From 1980 to 2018, 18–20-year-olds had the highest arrest rates for murder and non-negligent manslaughter. On average, the arrest rate for 18–20-year-olds across this 38-year time span was almost twice as high as the average rate for 15–17-year-olds for this type of offense (Office of Juvenile Justice & Delinquency Prevention, 2019). The age-crime curve as it pertains to murder and non-negligent manslaughter has also been demonstrably flattening in recent years. By 2014, national homicide rates among adolescents had declined precipitously compared with 1993 (Snyder et al., 2020).

Furthermore, from 1980 through 2018, arrest rates among young people for murder and non-negligent manslaughter showed significant fluctuation. Arrests of those aged 15–17 tripled between 1984 and 1994 (Fig. 2). Then, the arrest rate receded by nearly 70% over the next six years. During the same 1984–1994 period, those aged 18–20 showed a similarly large jump; in the years since, their homicide arrest rates have receded significantly, to one-third of the 1994 peak. When rates receded, the 15–17-year-old group dropped back to being below the 21–24-year-old group. The 18–20-year-old group continued to claim the highest number of homicide arrests through 2018 (Office of Juvenile Justice & Delinquency Prevention, 2019).

Researchers have hypothesized that the fluctuations may be due to the crack cocaine epidemic (Blumstein, 1995; O’Brien & Stockard, 2009), cohort replacement effects (O’Brien & Stockard, 2009), changes in medical and trauma care (Harris et al., 2002), and gun use and increased access to guns (Cook & Laub, 1998). If maturational delay were to account for the disparity between age groups, as argued by the Miller brief authors, there would be no pronounced dipping, and the distribution of homicides would be quite different. The neurodevelopmental expectation of the brain and behavior would not anticipate dramatic large-scale changes in reward seeking qualities, considerably less control, and more impacted judgment from pre- to post-1984 unless America were experiencing a cohort of higher lethality.

O’Brien and Stockard (2009) showed that cohort replacement accounted for less than half of the increase in youth homicides in the 1980s and 1990s. With homicide rates sharply dropping as they have, time has demonstrated that cohort replacement is not a proper explanation for the rise, and the disparity between age groups continues to shrink. Furthermore, the statistical trend of the age-crime curve disappears when controlling for socioeconomic status. In a recent analysis, Males (2015) investigated 54,094 California homicide deaths and found that, when controlling for poverty, the peak in late adolescent/young adult offending was only seen in high poverty groups; the age-crime curve was essentially flat in the other economic groups. This finding further underscores that age is more of a salient factor in impoverished areas.

Research on Maturity

The Miller brief further argued that adolescents’ judgment and decision-making capacities differ from those of adults with regard to impulse control, perceptions of risk and reward, and perception of consequences, which ultimately impacts their ability to make mature decisions. Dual systems theory suggests that reward-seeking behavior surges in early to mid-adolescence when self-regulation or cognitive control has yet to fully develop (Steinberg, 2010). If this theory were germane to adolescent homicide, homicide would peak in mid-adolescence (i.e., ages 15–17). As explained above, however, this is not the case, with crime, and homicide specifically, peaking around ages 18–19. A more recently published study from proponents of the dual systems theory concedes that the relationship between reward processing and cognitive control may not be reliably observed (Duell et al., 2016).

The Miller brief authors have also, elsewhere, contradicted claims made in the brief within their own research. For example, Albert and Steinberg (2011) noted that adolescents demonstrate decision-making competence, do not differ from adults on evaluating risk information, are capable of engaging necessary systems when making judgments and decisions, and have logical reasoning and information processing competence similar to adults by ages 15 or 16.

Moreover, a close examination of the supporting studies in the Miller brief reveals methodological flaws which belie the significance of the authors’ research. For example, the Miller brief cited Cauffman and Steinberg (2000) to assert that adolescents are less capable of self-regulation than adults, which impacts their ability to control their social and emotional impulses. The referenced study involved administering self-report questionnaires purported to assess “responsibility,” “perspective,” and “temperance” to 1,015 junior high, high school, and college students. On its face, if respondents are potentially less self-aware of how others might see them because of their adolescence, the very protocol of comparing information elicited only by self-reflection of adolescents as opposed to adults is unreliable.

Researchers compounded this methodological handicap by altering a standardized test without performing necessary additional standardization to see whether the scale maintained its psychometric properties. These scores from the above and other measures were aggregated to produce a composite measure of “psychosocial maturity.” Antisocial decision-making was assessed by presenting the respondents with five hypothetical situations that involve choosing between antisocial and socially acceptable courses of action such as joy-riding and shoplifting. There is a fundamental difference between involvement in behavior such as driving a fast car and committing homicide (Davidson, 2014–2015).

The study of moral cognition has frequently relied on hypothetical scenarios. However, the only way to know if these are good approximations of actual moral behavior is to study behavior in relevant environments where the stakes are high, emotionally charged, immediate, and tangible (FeldmanHall et al., 2012; Kang et al., 2011; Teper et al., 2011). One study found that people were more likely to inflict harm for monetary gain under real conditions than hypothetical ones, even though participants believed that people would be less likely to inflict harm in real conditions. The authors also concluded that situational and complex contextual cues were very important factors in real-world conditions, which are not as easily replicated or available in hypothetical moral probes (FeldmanHall et al., 2012). The Miller brief authors themselves have explicitly and specifically indicated that their laboratory studies cannot replicate real-world behavior (e.g., Botdorf et al., 2017; Gardner & Steinberg, 2005; Shulman et al., 2016).

Furthermore, some of the most emphasized studies of the Miller brief utilized self-report measures (e.g., Cauffman & Steinberg, 2000; Cauffman et al., 2010; Steinberg, 2008; Steinberg & Scott, 2003; Steinberg et al., 2009). Critics and proponents of self-report measures agree that there are flaws with this methodology and that systematic bias may exist in reporting (Krohn et al., 2010). As early as the 1970s, it was recognized that self-report measures tapped a different domain of delinquent behavior, namely activity that would not lead to arrest (Hindelang et al., 1979). To examine the potential for systematic bias, many studies were conducted over the next few decades. In a longitudinal study of 1,000 children, interviewed between the ages of 14 and 18, the authors found that the more arrests a person had, the more likely they were to under-report said arrests. This relationship existed regardless of race or gender (Krohn et al., 2013). These findings underscore the importance of not relying solely on self-report for assessing delinquent behavior, especially for serious offenders. Even outside the realm of criminality, self-report measures are susceptible to biases including social desirability bias, agreement bias, and deception (Supino et al., 2012).

The Miller brief highlighted what it characterized as psychosocial immaturity, citing Steinberg’s (2008) self-report study of subjects ranging in age from 10 to 30. However, this study lacked ecological validity, in that study conditions did not approximate the real world, which ultimately impacts the generalizability of the findings. The cited research mostly relied on middle, high school, or undergraduate student participants or community center volunteers who had taken the initiative to respond to posted ads. Reliance on college students and other convenience samples is common in social science research; however, generalizability to non-student populations is problematic, given the inconsistencies in findings (Peterson, 2001; Peterson & Merunka, 2014). These samples, like other data sets relied upon in the Miller brief, contain few, if any, major violent offenders such as those who commit homicide. There is not adequate antisocial variance in these populations to produce findings that would have external validity relevant to homicide offenders about whom questions of criminal maturity and identity are raised.

Steinberg and colleagues reported cognitive capacity reaching a plateau on par with adulthood at about age 16, and a linear increase in psychosocial maturity from about age 14 to the mid to late 20s (Steinberg, 2009). However, the article on their study findings graphically illustrates that about one-quarter of late adolescents scored at or above the mean for adults in psychosocial maturity. Their own findings indicate that a significant minority of adolescents are just as mature as adults (Steinberg et al., 2009). Cauffman and Steinberg (2000) also concluded that maturity of judgment was more predictive of antisocial decision-making on the hypothetical scenarios than age alone. A critique of Steinberg et al. (2009) further articulated that self-report on narrow and biased assessments of risk perception, sensation seeking, impulsivity, resistance to peer influence, and future orientation cannot be generalized to one’s capacity to make decisions about criminal behavior (Fischer et al., 2009). This is a strong argument against any automatic presumption of immaturity for all adolescents.

Theories that have been applied to the scientific-legal consideration of homicide have also relied heavily on games that are said to simulate risk. For example, one study utilized the Balloon Analogue Risk Task (BART) to assess reward seeking, self-regulation, and prediction of risk taking (Duell et al., 2016). Participants were instructed to press a space bar on a computer to pause the inflation of a balloon before it bursts, with higher inflation ratios indicative of greater risk taking. Another example, the Chicken game, is a video game in which a participant controls an animated car. Players are told that after a yellow light appears, the light will turn red at some point and a wall will pop up in front of the car. The object of the game is to move as far as possible after the yellow light without crashing into the wall. In the group condition, peers can call out advice, but the player makes the decision about whether to stop the car or not (Gardner & Steinberg, 2005). The Chicken game was developed at the academic affiliation of the authors, with no evidence that this test had been peer-reviewed outside of a graduate psychology program. Furthermore, there is no evidence to suggest that these tasks are relevant to criminal decision-making and other thought processes of an accused homicide offender undergoing assessment.

Validation research on neuropsychological and psychological tests has demonstrated that the performance of juveniles is often on par with, if not better than, the performance of adults (e.g., Delis et al., 2001; Harrison & Oakland, 2015; Wechsler et al., 2008). Not surprisingly, the Miller brief’s arguments have not impacted or altered psychological assessment on a day-to-day level in the clinical, forensic, or research domains, because those standardized and oft-used metrics have not manifested the performance differences claimed by the dual systems theory proponents. These tests have not identified any need to be re-normed with new age categories to account for differences in biological and social maturity. Thus, the arguments made in the Miller amicus brief have served as advocacy to the courts while making no impression within the responsible scientific community of testing relative strengths and weaknesses on the same domains.

The APA, in the Miller brief, advocated for juveniles’ lessened culpability due to their lack of maturity. Yet the APA had earlier argued the exact opposite position, of juvenile maturity, when it took up an amicus brief in Hodgson v. Minnesota, 497 U.S. 417 (1990). Hodgson challenged a state law that would allow abortions for a woman under the age of 18 after 48 hours, but only with two-parent notification. The Hodgson Court referenced its 1976 decision in Planned Parenthood v. Danforth, 428 U.S. 52, 74 (1976) in which it held that the right of a pregnant minor to decide to terminate her pregnancy “does not mature and come into being magically only when one attains the state-defined age of majority” (pp. 434–435). The Court also noted that Minnesota courts had ruled that women under the age of 18 years old who filed for judicial bypass were sufficiently mature and capable in 99.6% of 3,576 cases. The APA, in the Hodgson brief, specifically indicated that juveniles’ capacity to form moral principles against which to judge behavior and decisions is fully developed by ages 14–15.

To explain this apparent “flip-flop”, the Miller brief authors created and then parsed two types of maturity – cognitive and psychosocial. The authors suggested that psychosocial maturity is instrumental to homicide, but only cognitive development is operative for decision-making in mothers contemplating abortion (Steinberg et al., 2009). In Hodgson, the APA argued for full responsibilities for women deciding to abort a fetus, based on the woman’s ability to consider the impact and consequences of her decision. Just as it can be said that the pregnant woman in Hodgson had the abilities of sound judgment regarding the yet unborn, the youthful homicide offender, depending on the individual, should be expected to have sufficient psychosocial judgment to appreciate the enormity and irreversibility of homicide.

Negative External Influences

The Miller brief argued that adolescents are more vulnerable to external influences, namely peer pressure and their family and neighborhood conditions. The brief claimed that juveniles are more likely to commit crimes in groups and that criminal behavior was associated with exposure to delinquent peers. The argument that adolescents, unlike adults, commit crimes with peers rather than alone has been debunked as a myth.

For example, a large-scale study of 466,311 arrests from seven states showed that solo offending accounted for the bulk of offending among all age groups, including juveniles (Stolzenberg & Alessio, 2008). Furthermore, records of violent victimization published by the Bureau of Justice Statistics reveal that violent crime involving co-offenders is more common in the adolescent age group (12–17) than among young adults (18–29), but still well below the frequency of committing such offenses alone (Snyder et al., 2020). Myers et al. (1995) studied 25 juvenile homicide offenders, in crimes relating to a variety of motives, and found that in 68% of cases, the offender acted alone. Shumaker and McKee (2001) compared 30 juvenile homicide offenders with 62 other juveniles charged with non-homicide violent offenses and found that juvenile homicide offenders were more likely to act alone. Peers may be involved in the antisocial behavior surrounding homicides but are often tangential or incidental to the homicidal conduct (Pizarro, 2008).

Closer review of the studies informing the assertions that juveniles are more prone to peer pressure reveals the claims to be without adequate scientific foundation. None of these studies focuses on delinquents who have perpetrated major crimes such as homicide, meaning they lack external validity.

For example, in the cited Albert et al. (2013) study, the participants were 40 students divided into three small groups. Each participant completed the Stoplight game in either an alone condition where there were no observers, or a peer condition, where participants were told that their friends were going to observe their actions from a monitor. The Stoplight game is a computerized exercise, similar to a videogame, in which a player is tasked to maneuver a car to a distant location in the shortest time possible while moving through the yellow lights of 20 intersections. At best, peer pressure was inferred by a proxy measure of a participant thinking that a friend was observing them. The study is therefore artificial and without replication of real-world offending, real-world peer or gang dynamics, and real-world homicide perpetration.

The above argument from the Miller brief that peer influence causally impacts adolescent offending is, moreover, contradictory. According to the latest statistics, homicide peaks at around ages 20–21 (Snyder et al., 2020); yet homicide is far less frequent among younger adolescents, when resistance to peer influence, by the account of their staged experiments, is less. Steinberg and Monahan (2007) concluded that the ability to resist peer influence is at adult levels by age 18 even before the peak period of homicide offending. Monahan et al. (2009) compared resistance to peer influence among offender groups with different criminal trajectories and found no difference in resistance to peer influence between the group that desisted from crime and the group that persisted. The findings show that criminal behavior is not a consequence of one’s inability to resist peer influence.

Additional evidence demonstrating the overemphasis of peer influence arguments as they relate to homicide emerges from a large Pittsburgh public school sample of 1,517 children (Pittsburgh Youth Study; Farrington et al., 2018). Only 37 children went on to eventually be arrested for homicide (Loeber & Ahonen, 2013). Of the many factors accounted for, peer delinquency had the fifteenth largest effect size. Ultimately, peers are often the downstream product of antisocial traits and interests; that is, those with antisocial proclivities seek out antisocial peers (Gottfredson & Hirschi, 1990; Moffitt, 2018). The Pittsburgh Youth Study demonstrated that, of the features and history associated with youth eventually arrested for homicide, the effect of having a favorable attitude toward delinquency, of carrying a gun, of having used a weapon, and of one’s own delinquency was stronger than that of having delinquent peers (Loeber & Farrington, 2011; Loeber et al., 2005). Of the 37 in the sample arrested for homicide, 19 had committed at least 40 serious delinquent acts prior to committing a homicide (Loeber & Ahonen, 2013). Research has also revealed that youths’ association with deviant peers is related to other factors such as neighborhood instability and disorganization as well as ineffective parenting (Chung & Steinberg, 2006).

In a more recent study of 64,639 juveniles in Florida referred to the justice system for at least one misdemeanor or felony, association with antisocial peers had a negative relationship with risk for violent and sexual offending (Miley et al., 2020). The interaction between involvement with deviant peers and criminal offending is therefore neither robust nor straightforward.

In contrast to the Miller brief’s theoretical postulating, there have been large scale studies of youth who were ultimately arrested for homicide to ascertain risk factors for this outcome. In particular, the strongest risk factors for homicide (that do not relate to criteria of conduct disorder) are living in a disadvantaged neighborhood in childhood and being born to a teenage mother (Farrington & Loeber, 2011). Additional findings from the Pittsburgh Youth Study show that a history of violent offending predicted future homicide arrest for boys, but so did property offending, indicating future homicide offenders were already versatile offenders at a young age. Peer delinquency had far less of an impact, as noted above (Farrington et al., 2018).

In particular, the peer delinquency finding highlights the disconnect between Steinberg’s work and the body of research on actual homicide offenders. Loeber et al. (2005) found that homicide offenders were more likely to sell hard drugs or marijuana, to have been suspended, to be African-American, to be from a broken family, to live in a bad neighborhood, to have a disruptive behavior disorder, to have a favorable attitude toward delinquency, and to carry a gun. Baglivio and Wolff (2017) studied records of 5,908 juveniles who were first arrested by age 12. In the 24 individuals who were ultimately arrested for homicide or attempted homicide by age 18, the researchers found that household mental illness, specifically in a parent or siblings, along with a history of self-mutilating behaviors, and earlier severity of measured anger and aggression predicted a greater likelihood of arrest for homicide or attempted homicide. As in the Pittsburgh Youth Study, African-American males were more likely to be arrested for homicide by age 18.

However, the predictive value of race is reduced after other socioeconomic risk factors are controlled (Farrington & Loeber, 2011). In the Pittsburgh Youth Study, African-American youth were also exposed to more risk factors during their childhood, including socioeconomic neighborhood disadvantage and maltreatment (Loeber et al., 2017).

Peer delinquency did not predict future homicide offending in this large group, and neither did youth substance use. Consistent with this data, a recent large sample study explored motives for offending among youth at least 16 years old. Only 16% reported that their primary reason for any type of offending was for peer status (Craig et al., 2018). There is no study or data to demonstrate that, with any regularity, the mere peer observation endorsed by the research underlying the Miller brief, routine peer influence, or even peer pressure causes a person to commit a homicide they would not otherwise have committed.

Capacity for Reform and Change

A consistent theme throughout the Roper, Graham, and Miller briefs is that adolescents do not yet have a fully formed character, meaning they are more capable of change. Specifically, Miller indicated that personality traits are still changing and identity formation is incomplete until at least the early 20s. In actuality, research presents a much more ongoing and non-linear picture of personality and identity development. Studies have shown that after age three, there is continuity of personality traits in childhood and adolescence, and the level of stability increases through adolescence and young adulthood. However, personality traits continue to change throughout adulthood until sometime after age 50 (Helson et al., 2002; Roberts & Mroczek, 2008). In fact, Caspi et al. (2005) indicated that most personality changes occur in young adulthood, and not adolescence, and some traits continue to develop well beyond “typical age markers of maturity” (p. 468).

In a meta-analysis of 92 longitudinal studies, Roberts et al. (2006) reported that the most active period of personality change is between ages 20 and 40. The authors opined that the degree of this change was likely due to life experiences such as finding a marital partner, starting a family, and establishing a career. However, they also found that personality does not reach a point of stability at a specific age or developmental period. Rather, personality change can occur at any stage in life in response to age-graded expectations and roles, as opposed to a biological graduation akin to puberty or menopause.

Risk Factors and Recidivism

The Miller brief further argued that research could not reliably predict who might offend in the future, even among adolescents convicted of the most serious crimes. That is factually incorrect. Decades of research have illuminated our understanding of risk factors for violent and antisocial behavior, as well as recidivism risk for those who do offend. For example, Moffitt’s (1993) developmental taxonomy posits three broad groups of juvenile offenders: 1) abstainers from antisocial conduct, 2) persistent offenders who continue to break the law, often with serious and violent offenses, and 3) those whose offenses are typically limited to adolescence and are trivial or benign in nature. The Miller brief downplays that the majority of violent offenses are committed by life-course persistent offenders (DeLisi, 2005; Farrington et al., 2013; Moffitt, 2006; Moffitt et al., 2001; Monahan et al., 2013; Piquero et al., 2003). This subset constitutes approximately 10% of multiple cohorts that were followed for decades across a litany of methodologically high-quality longitudinal research studies. Research has demonstrated that life-course persistent offenders exhibit early onset of childhood antisocial behavior, are responsible for the majority of offending, and continue offending throughout life (Moffitt, 2018). Compared to other adolescents, those who commit homicide exhibit much more severe psychopathology, more severe antisocial conduct, and are much less likely to mature out of their pathological behavior (Caudill & Trulson, 2016; Hagelstam & Häkkänen, 2006; Myers & Scott, 1998).

The Miller brief, apart from focusing attention on adolescents who are primarily petty offenders, admonishes that the ability to predict future criminality is limited. This claim is false. Many studies have examined recidivism for homicide offenders, informing our understanding of future risk. Vries and Liem (2011) examined 137 homicide offenders who were accused between the ages of 12 and 17 in the Netherlands. Approximately half of the offenders had a criminal record, with an average of six prior offenses, and were 15 years old on average when they committed their first crime. After release, 59% of this sample criminally recidivated, with about 3% of the subsequent crimes being homicide or attempted homicide. Male gender was the most significant predictive variable, while maintaining relationships with delinquents predicted faster recidivism. Age at first offense, age at homicide, and lack of self-control also impacted recidivism.

Likewise, in a sample of 221 juvenile homicide offenders who did not receive the adult portions of blended sentences, 58% of the offenders committed a felony within ten years. Longer sentencing was associated with lower recidivism. The findings also demonstrated the higher likelihood of teenagers who kill to criminally recidivate if released as a juvenile disposition, especially if they have had disruptive behavior, or assaulted staff while in custody (Caudill & Trulson, 2016). In New Jersey, a study of 336 homicide offenders showed that among the groups studied (homicide precipitated by argument; homicide during a felony; domestic homicide; and vehicular homicide), homicide committed during a felony had the worst prognosis for criminal and violent recidivism (Roberts et al., 2007). In yet another study of 1,804 serious and violent delinquents, gang affiliation had a huge impact on re-arrest, even more so than poverty, physical abuse, and mental illness (Trulson et al., 2012).

Adverse childhood experiences (ACEs) are known to influence poor health outcomes, including violence perpetration. These include physical abuse, sexual abuse, emotional abuse, physical neglect, emotional neglect, household substance abuse, household mental illness, household member incarceration, parental separation/death, and family violence/domestic abuse (Felitti et al., 1998; Miley et al., 2020). Research has demonstrated that an ACE tally of five or more such events creates a 345% greater likelihood of early onset and persistent offending, specifically a first arrest at age 12 or under and an average of eighteen arrests by age 18 (Baglivio et al., 2015). Each additional ACE results in a 35% increase in likelihood of serious, violent, and chronic offending (at least 5 arrests, at least one violent) by age 18 (Fox et al., 2015).

The Pittsburgh Youth Study cohort showed persistent offenders were distinguished by a history of poor child rearing, large family size, low parental interest in education, and high parental conflict (Farrington, 2019). In a systematic review of studies of homicide offenders under 21, the cumulative relationship of a number of risk factors, including executive function problems, illness, epilepsy, violent family members, criminal family members, contact with the court, low academic achievement, gang/group membership, and weapon possession, distinguished those who committed homicide from non-offenders (Gerard et al., 2014). More recently, a study of 621 serious and violent juvenile offenders in Texas, some of whom were homicide offenders, showed that criminal justice system history, such as prior adjudications, disciplinary conduct while incarcerated, and length of incarceration, predicted post-release felony recidivism, but childhood ACEs did not (Craig et al., 2020).

Notwithstanding the Miller brief’s discounting of prognostic research, decades of study demonstrate that behavioral assessments in early childhood have enduring and significant predictive value. Drawing on a birth cohort of 1,037 subjects from the Dunedin Multidisciplinary Health and Development Study, multiple studies by Moffitt and colleagues identified risk factors for life-course-persistent offending including under-controlled temperament, neurological abnormalities, delayed motor development, low intelligence, poor reading ability, poor neuropsychological testing performance, hyperactivity, slow heart rate, parenting risk factors, family conflict, experiences of harsh and inconsistent discipline, changes in primary caregiver, low socioeconomic status, and rejection by school peers (Jeglum-Bartusch et al., 1997; Moffitt, 1990, 2006; Moffitt & Caspi, 2001; Moffitt et al., 1994).

Variance in childhood temperament, including effortful control and negative emotionality, is believed to impact antisocial behavior across the lifespan (DeLisi & Vaughn, 2014). In a meta-analysis of 55 studies, lower self-regulation (the ability to exercise control over one’s thoughts, feelings, and behaviors) measured around age 8 was associated with poorer outcomes around ages 13 and 38, including aggressive and criminal behavior (Robson et al., 2020). In fact, even infant socialization has been shown to have impacts on self-control, which further impacts reactive-overt and relational aggression at ages 8.5, 11.5, and 15 (Vazsonyi & Javakhishvili, 2019).

The predictive importance of child history in the criminal context, both positive and negative, is further reinforced by a 1997 study. Drawing from Moffitt’s Dunedin study cohort, the authors studied 539 males with a variety of measures, which included teacher reports and, later, convictions for violent offenses, as well as personality assessments. The results showed that childhood antisocial behavior was more associated with later convictions for violent offenses, while adolescent-onset antisocial behavior was more likely associated with convictions for non-violent offenses (Jeglum-Bartusch et al., 1997). In fact, Moffitt (2006) posited that childhood-onset antisocial behavior is almost always predictive of poor adjustment in adulthood.

Another study predicting risk from early years researched African-American families in Philadelphia as part of the Pathways to Desistance project. Child measures of school discipline and low IQ predicted a more serious offending career (Piquero & Chung, 2001). The same cohort demonstrated that neuropsychological test scores at ages 7 and 8 and at ages 13 and 14 predicted life-course persistent offending at age 39. Cognitive ability was associated with the likelihood of onset of delinquency, early onset of delinquency, and persistence in delinquency over the 18 years studied, net statistical controls, in this sample of inner-city urban youth (McGloin & Pratt, 2003). The fact that this sample was entirely African-American controls for concerns about any ethnic bias in testing, because that bias would affect all participants. Only 1.8% of the study sample, however, included homicide offenders. Research on this subgroup showed that lower IQ, previous exposure to violence, neighborhood disorder, and gun carrying were risk factors for committing homicide (DeLisi et al., 2016).

Using official criminal records for participants in the Pittsburgh Youth Study cohort, Ahonen et al. (2016) distinguished three risk factors that separated those arrested for homicide from other violent offenses—being African-American, conduct problems on initial screening, and favorable attitude to substance abuse. Lynam et al. (2009) found that psychopathy at age 13 was predictive of criminal convictions at age 26, even controlling for race, family structure, socioeconomic status, neighborhood socioeconomic status, physical punishment, inconsistent discipline, lax supervision, positive parenting, impulsivity, verbal IQ, attention deficit hyperactivity disorder, and conduct disorder. Juvenile psychopathy accounted for 6–7% of the variance in arrests and convictions occurring in early adulthood, well above the variance accounted for by any other variable in the study. A later study demonstrated that childhood psychopathy was associated with earlier, more extensive, and more violent criminal careers in a federal correctional sample (DeLisi et al., 2020).

The likelihood that a homicide offender is psychopathic is significant, even if far from universal. Fox and DeLisi’s (2019) meta-analysis of 29 samples from 22 studies including 2,603 homicide offenders found a strong overall association between psychopathy and homicide. Moreover, the effect sizes, or meaningfulness of this association, were stronger for more severe manifestations of homicide including sexual, sadistic, serial, and multi-offender homicides. Lynam et al. (2007) found that 29% of those who scored in the top 5% of psychopathy at age 13, those with the most psychopathic qualities, were diagnosed as psychopaths at age 24. That same study also found that some traits more likely persisted, even if they were interpreted and diagnosed differently from psychopathy in adulthood.

Interpersonal callousness during adolescence has been associated with higher levels of antisocial personality features in early adulthood (Pardini & Loeber, 2008). Youth with marked callous and unemotional traits are most at risk to become the adult offenders who perpetrate severe and chronic acts of violence (Reidy et al., 2015). Interpersonal callousness in youth is a risk factor for developing conduct disorder and has been found to be uniquely associated with a pattern of persistent and violent offending that continues into at least the early 30s (Pardini et al., 2018), and is associated with poorer treatment outcomes (Fairchild et al., 2019).

Clearly, there is ample research evidence to support the identification of risk factors for recidivism in homicide offenders. Like all complex human behaviors, homicide results from multiple factors, each with varying biological, psychological, social, and environmental influences. The dual systems model, apart from being flawed and resting on tenuous data and invalid conflating, ignores the already proven relationship of a host of factors to violent and persistent crime. What has not yet been measured and cannot be isolated is whether a person who has chosen homicide, by virtue of character, will choose a prosocial aspect of their identity after imprisonment.

Psychosocial Immaturity and Brain Development

The Miller amicus brief’s final argument cited neurological studies that suggested there are changes in brain function and structure during adolescence, specifically in the prefrontal cortex and in connections from the prefrontal cortex to other areas of the brain. The brief argued that these theorized changes during adolescence impact executive functions, which include planning, motivation, judgment, and decision-making. Executive functions enable a person to engage successfully in independent, purposeful, self-directed, and self-serving behavior (Lezak et al., 2012). However, the Miller brief takes a number of untenable leaps in neuroscience interpretation and overstates what has been, and even can be, demonstrated by neuroimaging.

For example, the Miller brief cites research showing decline in gray matter (nerve cell bodies) after puberty, in support of the assertion that neuroanatomical changes have direct bearing on adolescent homicide. However, that study examined only 13 children with a median IQ of 125 and did not control for right or left-handedness (Gogtay et al., 2004). Additionally, many areas of the brain were sampled, and the study did not control for socioeconomic factors or education. One cannot draw conclusions about homicide offenders from this study. Research has demonstrated that gray matter in the frontal lobe increases during pre-adolescence, with a maximum size at approximately 12 years for males and 11 years for females. Volume decreases in the years to follow, and after adolescence (Giedd et al., 1999). The brain’s gray matter is involved in innumerable and complex behavioral processes (Zatorre et al., 2012), but there is no identified linkage between gray matter and the components of a behavior as complex as homicide.

White matter, which is composed of the insulating sheaths that cover many nerve fibers, continues to increase from birth until the late teens or early 20s (Mills & Tamnes, in press). Changes in late adolescence have been correlated with increased cognitive efficiency for complex attention, working memory, verbal fluency, visual construction ability and recall (Bava et al., 2010). The increase in volume is less among females relative to males. Like gray matter, the volume of white matter does not correlate to homicide rates and risk because females, in whom homicide is less frequent, nevertheless have less pronounced increases (Giedd et al., 1999). Brain developmental activity during adolescence affects cognitive abilities to the degree that white matter microstructures are more advanced throughout development. Executive and cognitive functions, including response inhibition and working memory, continue to mature into adolescence, at which time performance is at an adult level (Simmonds et al., 2014).

The findings from fMRI studies have also been overinterpreted. In a study of financial risk taking, researchers administered an fMRI to adolescents age 9–17 and adults age 20–40 taking a computerized decision-making task involving probabilistic money outcomes. The adults exhibited greater activation of the orbitofrontal/ventrolateral prefrontal cortex and anterior cingulate cortex than adolescents when making risky selections. But adolescents and adults performed similarly on the decision-making task as both groups made similar risky choices and won similar cumulative amounts of money (Eshel et al., 2007). Therefore, although the researchers noted variations in brain activation in selected structures, there were not significant differences between adults and adolescents in actual performance on this decision-making task that incorporated risk. This is a vivid rejoinder to suggestions that adolescents are not capable of refraining from homicide because structural changes taking place in the brain mean that they do not have the necessary self-control functions to regulate homicidal actions. Studies such as this show that they rely upon other neural pathways to achieve the same end.

With regard to brain structures that lie beneath the level of the cortex (or subcortical), longitudinal studies of children and adolescents have found that during the second decade of life, the caudate, putamen and nucleus accumbens decrease in volume at a near linear rate but the amygdala, thalamus, and pallidum demonstrate a non-linear increase in volume (Mills & Tamnes, in press). The amygdala is implicated as an engine of fear, anger, and explosiveness (Davis & Whalen, 2001); however, these abnormalities are also found in subjects who are not reactively violent, non-violent offenders, and non-violent non-offenders (Hardcastle, 2015).

Neuroimaging studies cited in the briefs rely on cross-sectional, rather than longitudinal, data, making it impossible to derive developmental conclusions. Longitudinal studies allow researchers to study the long-term impacts of individual characteristics over time to make causally relevant conclusions for an individual examinee (Johnson, 2010). Furthermore, cross-sectional data gathered from groups gloss over pivotal individual differences and invite the ecological fallacy: making causal inferences from group data to individuals (Robinson, 1950).

Each individual brain responds to different stimuli leading to homicide in a unique way. Group tendencies, therefore, do not inform how individuals employ judgment, choices, reasoning, self-control, or reactions to peers (Hardcastle, 2015). This is especially problematic in a legal context, where observations about groups are applied to individuals although group findings only provide minimal support for individual determinations (Poldrack et al., 2017). Moreover, neither cross-sectional imaging studies nor longitudinal imaging studies have shown correlations with everyday behavior, let alone the complexity of homicide. Luna and Wright (2016) further indicate that it is not possible to draw causal links between brain imaging data and criminal behavior, as most research has utilized simple tasks that have little or no bearing on criminal behavior.

It would appear from reading the Miller brief that science has concluded that different patterns of brain activation map to specific behaviors. However, this notion is not true (Pfeifer & Allen, 2012; Silber, 2011), and acknowledgment of the limitations of this research is growing (Johnson et al., 2009; Pfeifer & Allen, 2012; Poldrack, 2011). Sherman et al. (2018) acknowledged that future work with larger samples would be needed to appropriately conclude that there is a correlation between risk taking and activation in areas of the brain.

Research claiming to find differences in limbic prefrontal (orbitofrontal and medial prefrontal) region activity between children, adolescents, and adults relied on tasks that are in no way representative of the real world, such as pushing a button when happy, fearful, or calm faces appear (Dreyfuss et al., 2014). Reliance on research with fearful and happy faces for conclusions about homicide and violent crime vividly illustrates the degree to which theorists and advocates overreach within the public policy arena. Perhaps more importantly, a recent meta-analysis concluded that task-based fMRI measures (e.g., the Stroop test and face-matching emotion-processing tasks) are not reliable, with poor intraclass correlations and test–retest reliabilities. The authors concluded that task-fMRI measures should not be used for identifying brain biomarkers or for studying differences between individuals. Rather, they recommended an overhaul of research in this arena, with larger samples and new tasks that rely on more natural stimuli (Elliott et al., 2020).

The results of brain scanning in general as applied to human behavior must be viewed with judicious caution (Shermer, 2008). The Miller brief asserts that brain activation in fMRI studies is illustrative of processes involved in violent offending. However, in an article published around the time of the Miller brief, Steinberg himself indicated that neuroimaging studies about judgment and decision-making needed to be complemented by research in the real world (Albert & Steinberg, 2011). Four years before Miller, Steinberg (2008) indicated that “much of what is written about the neural underpinnings of adolescent behavior – including a fair amount of this article – is what we might characterize as ‘reasonable speculation’” (p. 81) and urged readers to exercise caution when drawing inferences from brain imaging research.

Longitudinal studies, such as the Adolescent Brain Cognitive Development study, are now finally being conducted with larger sample sizes, to learn about changes over time within the same individuals (ABCD Study, n.d.). This research will be better positioned to provide a reliable and valid understanding of how the brain changes structurally. However, these changes still must be correlated with behavior such as homicide, controlling for relevant variables, to yield generalizable results.

Discussion

There is no doubt that the Miller brief and the United States Supreme Court decision citing to it have had far-reaching impact on the criminal justice system and sentencing for juvenile offenders. However, the science touted by the Miller brief, as reviewed above, is rife with contradictions, over-simplifications, non-generalizable findings, and methodological flaws and constraints, and it leads to ecological fallacy. Relying on these research studies as a basis for significant Court and public policy decisions ignores a plethora of research showing that many juveniles have no different judgment, decision-making, risk appraisal, and impulse control than adults in general. Moreover, risk factors for violent offense and re-offense have been readily identified in the literature.

The United States Supreme Court in Miller disallowed mandatory sentencing of life without parole in order to allow for courts to individually assess a homicide defendant, their crime, their background, and their subsequent course. The available literature demonstrates adolescent homicide offenders evince a number of psychosocial or developmental deficits, drug abuse, mental illness, or behavioral disorders. This is no different from those who commit homicide at older ages. Some killers may be at higher risk for persistent offense in the community, and even a higher risk to commit homicide.

Much can be learned about an individual’s background, history, and personality through careful interview of the defendant and query of collateral informants, to inform potential risk. Imposing a blanket restriction on life sentencing for juvenile homicide offenders is incompatible with the lessons of research to date that recognize individual differences and, indeed, with the Miller opinion itself. What, then, is the correct approach for assessing maturity in juvenile homicide offenders?

Criminal Maturity and Homicide

The most salient diagnostic data informing a criminal defendant’s maturity or immaturity is history (Kruh & Brodsky, 1997). Two essential components of history are details of the crime and personal background. Fully accounted and corroborated history yields a reliable and valid individualized assessment (APA, 2013; Heilbrun et al., 2003). The pertinent details of the crime’s antecedents, the specifics of the crime, the defendant’s relatedness to the victim, other factors influencing the criminal offending, and the crime’s aftermath provide core data necessary for individualized assessment (Heilbrun et al., 2004). The offender’s background, likewise instrumental to individual assessment, derives from developmental, scholastic, social, interpersonal, trauma, antisocial history, medical, psychiatric, drugs, alcohol, and vocational domains (Grisso, 2003, 2013; Heilbrun et al., 2009). The potential for errant judgment or bias is reconciled by the quality and quantity of history about the crime and the defendant’s background (APA, 2013; Heilbrun et al., 2003). Information is corroborated and validated by videotaping the interview and interviewing collateral sources (Borum et al., 1993).

Standardized and validated psychological and/or neuropsychological testing supplements history with additional objective data but does not replace the need for that historical foundation. If history, behavior, and neuropsychological testing illustrate deficits associated with brain imaging, then the imaging enriches the evaluation with more precise understanding (Raine, 2014). Questions of criminal maturity rely upon human evidence, which has the capacity to present itself and then be refashioned to manage the impression of the examiner and court. Given the legal consequences, managing the impressions of a forensic examiner is a necessary survival skill for any criminal defendant. It is also a major challenge to the reliability and validity of human evidence (Rogers & Bender, 2003).

Accounting for history protects from forces that contaminate and undermine the authenticity of human evidence and its fidelity to the facts (APA, 2013). Because history is paramount, the understanding of the individual defendant should be informed on the case investigative level. Full access to the smartphone of the defendant and other related actors, for example, provides abundant, critically relevant and valid contemporaneous data uncorrupted by adversarial positioning and is the most diagnostic source material available to emotional, cognitive, and behavioral understanding today (Coffey et al., 2018).

Individualized assessment that probes the presence or absence of characteristics relevant to motive and the criminal behavior is the hallmark of forensic psychiatry and psychology practice. Such an evaluation involves applying established scientific understandings to confirmed history, behavioral evidence, testing data, the interview, and other background and collateral information, such as that yielded from witness interviews and smartphone recordings and transmissions. To that end, excluding a probe of motive and the antecedents of a criminal act necessarily downgrades the validity of an exam and biases it by turning away from the very basis for examination of the defendant. Such practice is akin to the cardiologist who refuses to perform an electrocardiogram for chest pain and insists on focusing only on potential gastrointestinal, musculoskeletal, hematological, and other sources of chest pain. The behavioral sciences operate as medical disciplines do, without investment in the outcome.

A forensic scientist’s responsibilities do not allow the presumption of motive or biases about what one believes adolescents are capable of, but mandate deconstruction of the crime, the killer, and a homicide’s antecedents. Case facts and evidence are the basis for any scientific conclusion. Theory is accountable to case facts and evidence, or ultimately spoils, unused, like other items abandoned for lack of scientific nutritional value. Sentencing decisions for juvenile homicide offenders should be made based on a thorough and holistic assessment of the offender, not based on a blanket assumption of immaturity due entirely to the offender’s age.