Abstract

This research paper explores the ethical considerations in using financial technology (fintech), focusing on big data, artificial intelligence (AI), and privacy. Using a systematic literature-review methodology, the study identifies ethical and privacy issues related to fintech, including bias, discrimination, privacy, transparency, justice, ownership, and control. The findings emphasize the importance of safeguarding customer data, complying with data protection laws, and promoting corporate digital responsibility. The study provides practical suggestions for companies, including the use of encryption techniques, transparency regarding data collection and usage, the provision of customer opt-out options, and the training of staff on data-protection policies. However, the study is limited by its exclusion of non-English-language studies and the need for additional resources to deepen the findings. To overcome these limitations, future research could expand existing knowledge and collect more comprehensive data to better understand the complex issues examined.

1. Introduction

Fintech businesses have used big-data analytics and artificial intelligence (AI) to evaluate enormous volumes of data from several sources and to make autonomous suggestions or judgments (Li et al. 2022; Yu and Song 2021). Fintech organizations may provide more individualized financial services, increase operational effectiveness, and cut costs by integrating AI and big data (Ashta and Herrmann 2021). ChatGPT, as an AI tool, plays a crucial role in this context by assisting in the analysis of big data and enabling fintech organizations to provide more personalized financial services, enhance operational effectiveness, and reduce costs (George and George 2023). However, the integration of AI and big data also brings forth ethical and privacy concerns, encompassing issues of bias, discrimination, privacy, transparency, justice, ownership, and control (Hermansyah et al. 2023). The complexity of the financial system and the internal data representations of AI systems may pose challenges for human regulators in effectively addressing emerging problems (Butaru et al. 2016). Therefore, an understanding of the ethical implications of fintech, including the responsible use of tools such as ChatGPT, is paramount in fostering customer trust and confidence (George and George 2023).

This study aims to investigate the ethical issues surrounding fintech, particularly those concerning big data, AI, and privacy. It focuses on resolving data-security and privacy issues while examining the intricate interplay between fintech and customer trust. The research also provides a summary of the best practices and approaches for adhering to data-privacy rules and regulations, and it considers corporate digital responsibility as a means of boosting financial success and digital trust. The study is motivated by the need to explore the ethical implications of fintech, how they affect digital trust and customer acceptance of fintech services, and how companies can earn customers’ confidence.

This study’s objectives strongly emphasize the value of safeguarding customer data, calling for firms to gather and utilize customer data responsibly, uphold reliable data-security measures, use encryption techniques, and routinely evaluate and update their data-protection policies. Organizations must be transparent about their data-collection and -usage processes, allow customers to opt out of data collection and use, and follow data-protection laws and regulations. Companies must also ensure that their data sets are diverse and represent their customer base to prevent discriminatory practices. Additionally, organizations must provide their staff with appropriate training related to customer-data protection and hold them accountable for following established policies and procedures. Therefore, this research paper investigates the ethical implications of the integration of big data and AI in the financial sector. The paper addresses the following research questions: (1) What are the ethical implications of the integration of big data and AI in the financial sector, and how can issues such as bias, discrimination, privacy, transparency, justice, ownership, and control be addressed? (2) How do data-privacy and -security concerns affect customer trust in fintech companies, and which strategies can be implemented to resolve these issues? (3) What are the best practices and approaches for adhering to data-privacy rules and regulations in the digital finance industry, and how can organizations ensure compliance with data-protection laws and regulations? (4) What is the impact of the corporate digital responsibility (CDR) culture on financial performance and digital trust, and which indirect performance benefits, such as customer satisfaction, competitive advantage, customer trust, loyalty, and company reputation, are associated with it?

The study’s findings suggest that inadequate internal controls are a leading cause of fraud and asset misappropriation in firms, and that millennials are more vulnerable to privacy risks in online banking because their level of financial knowledge is significantly lower than that of older generations. Studies have also demonstrated that businesses with a corporate digital responsibility (CDR) culture benefit from indirect performance effects, such as customer satisfaction, competitive advantage, customer trust, loyalty, and company reputation.

This paper contributes to the literature by examining the ethical and privacy considerations associated with the intersection of big data, AI, and privacy in the digital finance industry. It considers the complex relationship between fintech and customer trust and provides best practices and strategies for organizations to ensure compliance with data-protection laws and regulations. This study acknowledges the importance of digital trust in the adoption of fintech services and explores the impact of data-privacy and -security concerns on customers’ trust in fintech companies. Finally, the study emphasizes the importance of corporate digital responsibility in enhancing financial performance and digital trust. It argues that businesses with a CDR culture benefit from indirect performance effects, such as customer satisfaction, competitive advantage, customer trust, loyalty, and company reputation.

2. Methodology

The current study employed a systematic review method to establish a reliable evidence base for recommendations to individuals, organizations, and fintech providers. The systematic-review process is defined as “a scientific process governed by a set of explicit and demanding rules oriented towards demonstrating comprehensiveness, immunity from bias, and transparency and accountability of technique and execution” (Dixon-Woods 2011, p. 332). The review included empirical research published since 2005 and involved a range of approaches, including searching academic journals, library catalogs, and online databases. The search strategy incorporated specific keywords related to the research topic, such as “FinTech,” “Big data analytics,” “Artificial intelligence (AI),” “Data security and privacy,” “Corporate digital responsibility (CDR),” “Customer trust,” and “Ethical considerations.” Through this rigorous process, a total of 39 relevant studies were identified and included in the review. The steps of the systematic-review process are shown in Figure 1 (Dixon-Woods 2011).

Figure 1. Systematic review process.

Each process step was documented, and choices were made as a team to ensure the evaluation was methodical. The initial step involved defining the inclusion criteria, which mandated the selection of peer-reviewed research written in English that directly aligned with the research goals. Additionally, exclusion criteria were established to exclude studies lacking authenticity, dependability, or relevance to the research objectives. These criteria underwent refinement to ensure the selection of high-quality and relevant papers for analysis. Subsequently, an extensive search was conducted across multiple databases and sources using predefined search terms and keywords. The databases utilized included Google Scholar, ACM, Springer, Elsevier, Emerald, Web of Science, MDPI, and Scopus to encompass a wide range of sources. Subject-matter experts were approached to address questions about the suitability of the search keywords, and their recommendations were integrated to ensure thoroughness and relevance.
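The screening step described above can be illustrated with a minimal sketch. It assumes that each candidate study is available as a simple record with a title, publication year, language, and peer-review flag; the `Record` class, the function names, and the decision to match keywords against titles only are hypothetical choices made for illustration and do not reproduce the review team's actual tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical bibliographic record for one candidate study."""
    title: str
    year: int
    language: str
    peer_reviewed: bool


# Search terms taken from the methodology; matching on titles only is an assumption.
KEYWORDS = (
    "fintech",
    "big data analytics",
    "artificial intelligence",
    "data security and privacy",
    "corporate digital responsibility",
    "customer trust",
    "ethical considerations",
)


def meets_inclusion_criteria(record: Record, min_year: int = 2005) -> bool:
    """Return True if the record is peer-reviewed, in English, recent, and on topic."""
    if not record.peer_reviewed:
        return False
    if record.language.lower() != "english":
        return False
    if record.year < min_year:
        return False
    title = record.title.lower()
    return any(keyword in title for keyword in KEYWORDS)


def screen(records):
    """Keep only the records that satisfy every inclusion criterion."""
    return [record for record in records if meets_inclusion_criteria(record)]
```

In practice, a screen of this kind would only produce a shortlist; the quality appraisal and team decisions described in the text would still be carried out manually.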

After identifying potentially relevant studies, each study underwent an appraisal to assess its quality, relevance, and methodological rigor concerning the research questions. Various methods and techniques, such as checklists, were employed to ensure consistency and reliability during the appraisal process. The findings of the selected studies were then synthesized by organizing the summaries of research methodology, findings, and weight of evidence under thematic headings. This process facilitated the organization of findings and the identification of key themes and patterns in the literature. Each piece of research was carefully screened against the inclusion criteria, mapped, and summarized before it was synthesized into the report. The findings were appraised regarding their methodological quality and relevance to derive reliable and valid conclusions. Techniques such as statistical analysis and data visualization were employed to enhance the understanding and presentation of the findings.

Ultimately, a set of recommendations closely linked to the synthesized findings was formulated, identifying the practical implications of the research for future studies and practice. A rigorous and systematic process underpinned the research paper to ensure the inclusion of high-quality studies that directly aligned with the research objectives.

Content analysis was employed to identify the research themes and topics discussed in the literature, as well as to identify gaps. This involved analyzing the content of research papers and utilizing ChatGPT’s natural-language-processing tool to classify streams and sub-streams. Initially, a sample of research papers was uploaded to the tool for content analysis, generating a list of suggested themes and sub-themes. Subsequently, a manual review-and-refinement process was conducted to ensure their relevance to the research questions. Experts in natural language processing were consulted to validate the suitability of the tool for this analysis. A rigorous data-collection-and-analysis process was implemented to ensure high-quality and pertinent data utilization. Once the themes and sub-themes were identified, selected research findings within each theme were thoroughly reviewed to identify critical research gaps. This comprehensive process enhanced the understanding of the current state of research in the field and highlighted areas that require further investigation.

To summarize, a systematic-review methodology was employed to establish a reliable evidence base for providing recommendations to individuals, organizations, and fintech providers. The methodology involved various steps, including definition of inclusion and exclusion criteria, performance of comprehensive database searches, appraisal of study quality and relevance, synthesis of findings, and formulation of recommendations. Statistical analysis and data visualization were used to present the findings, while content analysis was employed to identify research themes and gaps in the literature. The review and analysis were grounded in high-quality and pertinent data, and expert consultation in natural language processing ensured the appropriateness of the analysis tool. This study’s rigorous and systematic methodology ensured a comprehensive, transparent, and accountable review-and-analysis process. The recommendations derived from the synthesis were closely aligned with the findings, establishing practical implications for future research and practice. The utilization of natural-language-processing tools, such as ChatGPT, facilitated the efficient and effective analysis of a substantial volume of research, identifying crucial research gaps in the field.

Overall, this study adhered to a rigorous systematic approach that met high quality standards and addressed the research objectives directly. The integration of various methods, techniques, and expert consultations contributed to the study’s comprehensive nature, enhancing the findings’ reliability and validity. By following this well-structured methodology, the study aimed to provide a solid foundation of evidence to guide decision-making and future investigations in the field.

3. Literature Review

The fintech industry has witnessed significant advancements in recent years, fueled by digitalization and the integration of big-data analysis, artificial intelligence (AI), and cloud computing (Lacity and Willcocks 2016). As a result, banks and financial institutions are able to offer more convenient and adaptable services to customers through financial technology (fintech) (Malaquias and Hwang 2019). By leveraging mobile devices and other technological platforms, fintech enables customers to easily access their bank accounts, receive transaction notifications, and engage in various financial activities (Stewart and Jürjens 2018b).

One of the key drivers of the adoption of AI in the fintech sector is its ability to process vast amounts of data and extract valuable insights for decision-making purposes (Daníelsson et al. 2022). With the integration of AI and big-data analytics, fintech companies can offer personalized financial services, enhance operational efficiency, and reduce costs, thereby gaining a competitive edge in the market (Peek et al. 2014; Mars and Gouider 2017). However, the use of AI and big data in the fintech industry raises ethical and privacy concerns (Matzner 2014; Yang et al. 2022).

The intersection of big data, AI, and privacy in the fintech sector has prompted discussions on the importance of addressing ethical considerations. These considerations include bias, discrimination, privacy, transparency, justice, ownership, and control (Saltz and Dewar 2019). Ensuring fairness in decision-making processes is crucial, as biased or incomplete data inputs can lead to unfair or discriminatory outcomes that significantly affect individuals (Daníelsson et al. 2022). Transparency in data collection, processing, and analysis is also essential for maintaining customer trust and credibility (Vannucci and Pantano 2020). Moreover, the protection of personal data and adherence to data-protection laws and regulations are critical ethical considerations for fintech companies (La Torre et al. 2019).

The complex relationship between fintech and customer trust is another significant aspect that requires attention. Trust plays a pivotal role in the adoption of fintech services, particularly concerning data security and privacy (Stewart and Jürjens 2018b). Online-banking vulnerabilities and data breaches have raised concerns among customers, making them cautious about engaging in financial transactions through fintech platforms (Swammy et al. 2018). Addressing data privacy and security concerns is essential for fostering customer trust and encouraging the broader adoption of fintech services (Laksamana et al. 2022).

To bridge the trust gap in the fintech era, strategies for fostering trust in fintech companies have been proposed. One such strategy is the adoption of corporate digital responsibility (CDR), which emphasizes the responsible and ethical use of data and technological innovations (Jelovac et al. 2021). The implementation of a culture of CDR within organizations can enhance financial performance, digital trust, customer satisfaction, and reputation (Saeidi et al. 2015). By prioritizing the positive impact of technology on society and ensuring ethical data processing, fintech companies can establish and maintain digital trust (Herden et al. 2021).

Furthermore, compliance with data-protection laws and regulations is crucial in ensuring data privacy and security in the digital finance industry. The General Data Protection Regulation (GDPR), implemented in the European Union (EU), has emerged as a significant framework for data privacy and protection (Mars and Gouider 2017). The GDPR mandates that organizations handling personal data obtain explicit consent from individuals in specific circumstances, provide transparent information about data processing, and implement appropriate security measures. Compliance with the GDPR safeguards customer data and enhances trust and credibility in the fintech sector (Vannucci and Pantano 2020).

In addition to regulatory compliance, embracing technological solutions is crucial for effectively protecting customer data in the fintech industry. Encryption algorithms, for example, play a vital role in ensuring that sensitive information remains unreadable and secure during transmission and storage (Peek et al. 2014). By employing strong encryption techniques, fintech companies can prevent unauthorized access to customer data and mitigate the risk of data breaches. Moreover, the implementation of multi-factor authentication methods, such as biometrics or token-based systems, adds an extra layer of security to customer accounts and reduces the likelihood of unauthorized access (Yang et al. 2022).
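As an illustration of the token-based authentication mentioned above, the following minimal sketch computes time-based one-time passwords in the manner of RFC 4226 (HOTP) and RFC 6238 (TOTP), using only the Python standard library. The function names, the 30-second time step, and the one-step tolerance window are assumptions chosen for illustration; the sketch is not drawn from the reviewed studies and is not a substitute for an audited authentication library.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password for a given counter (RFC 4226)."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password: HOTP over the current time step (RFC 6238)."""
    now = time.time() if at_time is None else at_time
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at_time=None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps on either side."""
    now = time.time() if at_time is None else at_time
    counter = int(now // step)
    for drift in range(-window, window + 1):
        if counter + drift < 0:
            continue  # no time step exists before the epoch
        expected = hotp(secret, counter + drift)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected.encode("utf-8"), submitted.encode("utf-8")):
            return True
    return False
```

A second factor of this kind complements, rather than replaces, encryption of data in transit and at rest; the shared secret itself would need to be stored and provisioned securely.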

Addressing ethical and privacy challenges in the fintech sector requires collaborative efforts among various stakeholders. Fintech companies, regulators, and consumers must work together to establish ethical guidelines, promote responsible data practices, and enhance transparency (Gong et al. 2020). Regulatory bodies play a crucial role in monitoring the evolving landscape of fintech and in adapting policies and guidelines accordingly to protect consumer rights and privacy (Castellanos Pfeiffer 2019).

Fintech companies, for their part, should adopt transparent practices, educate customers about data privacy, and provide clear opt-out mechanisms to respect individual autonomy (Swammy et al. 2018).

In conclusion, the integration of AI and big data in the fintech industry presents opportunities and challenges. While these technologies enable innovative financial services and enhanced customer experiences, addressing ethical concerns such as bias, transparency, privacy, and trust is paramount. By prioritizing the responsible and ethical use of data, complying with regulatory frameworks such as the GDPR, and adopting secure technological solutions, fintech companies can build trust, ensure customer privacy, and foster the industry’s sustainable growth. Collaborative efforts between stakeholders are crucial in creating an ethical and privacy-conscious fintech ecosystem.

4. Content Analysis

This research paper reports a content analysis of data-privacy vulnerabilities in the fintech industry. A thematic-analysis approach was utilized to categorize the collected research into three key themes. The first theme, Ethical Considerations in Fintech: The Intersection of Big Data, AI, and Privacy, highlights the significance of addressing concerns such as bias, discrimination, privacy, transparency, justice, ownership, and control in the fintech sector. The second theme, Navigating the Complex Relationship between Fintech and Customer Trust: Addressing Data-Privacy and -Security Concerns, underscores the need to address data-security and -privacy issues to cultivate customer trust in fintech companies. The third theme, Bridging the Trust Gap: Strategies for Fostering Trust in the Fintech Era, offers strategies for building trust in fintech companies by promoting corporate digital responsibility and adherence to data-privacy laws and regulations. The findings of this analysis highlight the critical role of data privacy and security in building customer trust and corporate reputation.

Furthermore, the paper suggests best practices and strategies for fintech companies to ensure data protection and security. The implications of these findings are relevant to financial firms, policymakers, and other stakeholders seeking to ensure the responsible and ethical use of big data and AI in the digital finance industry. Nevertheless, it is essential to acknowledge the study’s limitations, such as the exclusion of non-English-language studies and the need for additional resources to deepen the findings. By collecting more comprehensive data and expanding existing knowledge, researchers can better understand the complex ethical and privacy issues associated with fintech.

4.1. Ethical Considerations in Fintech: The Intersection of Big Data, AI, and Privacy

The advancement of digitalization, supported by technical enablers such as big-data analysis, cloud computing, mobile technologies, and integrated sensor networks, is causing significant changes in how organizations operate in economic sectors (Lacity and Willcocks 2016). With increased internet and e-commerce usage, banks can now provide customers with more convenient, effective, and adaptable services (Malaquias and Hwang 2019). This has led to the utilization of financial technology (fintech) to enhance banking services: customers use mobile devices and other technological platforms to access bank accounts and to receive transaction notifications and debit and credit alerts through in-app push notifications, SMS, or other notification types. Fintech also includes mobile-application features such as multi-banking, blockchain, fund transfer, robot advisory, and concierge services, from payments to wealth management (Stewart and Jürjens 2018b).

To improve the speed and accuracy of their operations, deliver personalized services to customers, and reduce expenses, fintech companies have leveraged artificial intelligence (AI). AI is a technology that replicates cognitive functions associated with humans and facilitates the processing of vast amounts of data generated from multiple sources, such as social media, online transactions, and mobile applications (Daníelsson et al. 2022). Algorithms based on AI use large sets of data inputs and outputs to recognize patterns and effectively “learn,” which allows machines to be trained to make autonomous recommendations or decisions. The implementation of AI allows fintech companies to extract valuable insights to support their decision-making processes through big-data analytics. Big data refers to an overwhelming influx of data from numerous sources in various formats, presenting significant challenges for conventional data-management methods (Peek et al. 2014; Mars and Gouider 2017). In the financial market, big data has become a critical asset that is used to record information about individual and enterprise customers (Erraissi and Belangour 2018). By integrating AI and big data, fintech companies can provide more personalized financial services, improve operational efficiency, and reduce costs, enhancing their competitive edge in the market. However, this raises ethical and privacy challenges (Castellanos Pfeiffer 2019; Gong et al. 2020; Yang et al. 2022).

The use of AI in the Internet of Things (IoT) context raises ethical, security, and privacy concerns. The lack of intelligibility of the financial system and internal data representations of AI systems may impede human regulators’ ability to intervene when issues arise (Butaru et al. 2016). Systems based on AI rely on data inputs that may be biased or incomplete when determining individuals’ preferences for services or benefits, resulting in unfair or discriminatory decisions that can significantly affect individuals. Additionally, AI algorithms can threaten data privacy by collecting and analyzing large amounts of personal data without individuals’ knowledge or consent; such data can then be used for various purposes, including targeted advertising or political profiling. These risks, namely data misuse and the erosion of privacy, are heightened if the data are not collected and stored in compliance with data-protection laws and regulations (Vannucci and Pantano 2020). The de-identification of data to protect an individual’s privacy while allowing meaningful analysis is another challenge in big-data analytics (La Torre et al. 2019). The ethical considerations surrounding big data include privacy, fairness, transparency, bias, and ownership and control (Saltz and Dewar 2019). The protection of personal information and its use in a transparent, reasonable, and respectful manner are crucial to ensuring data privacy. This is especially important in the financial industry, in which sensitive information such as bank-account numbers, credit scores, and transaction details is involved.
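The de-identification challenge noted above can be made concrete with a minimal sketch that combines keyed pseudonymization of account numbers with generalization of amounts and dates. The field names (`account_number`, `amount`, `date`), the band width, and the function names are hypothetical and chosen for illustration only; pseudonymization of this kind reduces, but does not eliminate, the risk of re-identification.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 token.

    The same value and key always yield the same token, so records can still
    be linked for analysis, but the token cannot be recomputed without the key.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def amount_band(amount: float, width: int = 100) -> str:
    """Generalize an exact amount into a coarse range such as '200-299'."""
    lower = int(amount // width) * width
    return f"{lower}-{lower + width - 1}"


def deidentify(transaction: dict, key: bytes) -> dict:
    """Return a copy that keeps only pseudonymized or generalized fields.

    Assumes hypothetical keys 'account_number', 'amount', and an ISO-format
    'date' (YYYY-MM-DD); every other field, such as a name, is dropped.
    """
    return {
        "account_token": pseudonymize(transaction["account_number"], key),
        "amount_band": amount_band(transaction["amount"]),
        "month": transaction["date"][:7],
    }
```

The trade-off discussed in the text is visible here: wider amount bands and coarser dates offer more protection but leave less detail for meaningful analysis.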

Fairness in decision-making is another critical consideration when using big data and AI algorithms. As Daníelsson et al. (2022) noted, biased or incomplete data inputs can result in unfair or discriminatory decisions that significantly affect individuals. To address this issue, fintech companies must ensure that their data sets are diverse and represent their customer base. They should also implement ethical and unbiased data-processing methods to prevent discrimination and ensure fairness in decision making. Transparency in data collection, processing, and analysis is crucial for maintaining customer trust and credibility. Fintech companies should clearly and concisely explain how they collect, store, and use personal data. Additionally, they should be transparent about their algorithms and the decision-making processes behind their services. Finally, the ownership and control of personal data are critical ethical considerations that fintech companies must address. They must adhere to data-protection laws and regulations to protect the rights and interests of data owners. This includes obtaining consent before collecting and using personal data and ensuring that data are deleted securely and promptly when no longer needed.
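One simple way to examine whether automated decisions treat groups of customers comparably is to compare approval rates across groups. The minimal sketch below computes per-group approval rates and the ratio of the lowest rate to the highest, sometimes called a disparate impact ratio. The input format, the function names, and the choice of this particular metric are assumptions made for illustration; the reviewed literature does not prescribe a specific fairness measure.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs, where `approved`
    is True or False. The group labels are hypothetical.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions) -> float:
    """Ratio of the lowest group approval rate to the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    if not rates:
        raise ValueError("at least one decision is required")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so all groups are treated alike
    return min(rates.values()) / highest
```

A ratio well below 1.0 would indicate that one group is approved far less often than another and would warrant closer review of the data and model; a threshold such as 0.8 is a commonly cited rule of thumb but is an assumption here, not a finding of this study.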

In conclusion, the integration of AI and big data in fintech services provides significant benefits, such as improved efficiency, personalized services, and reduced costs. However, this also raises ethical and privacy concerns that must be addressed to protect customers’ rights and interests. By implementing ethical data-processing methods, ensuring transparency, and respecting data ownership and control, fintech companies can enhance their reputations and maintain trust with their customers.

4.2. Navigating the Complex Relationship between Fintech and Customer Trust: Addressing Data-Privacy and -Security Concerns

The impact of financial technology, or fintech, on the retail-banking sector has been extensively researched and debated in recent years. Yousafzai et al. (2005) found that fintech has enabled banks to provide their customers with more convenient, effective, and adaptable services through mobile-banking apps and online payment systems, which enhance overall customer experience and provide greater accessibility to financial transactions. However, the entry of fintech companies to the market and their offers of alternative financial services have sparked concerns about data privacy and security and the impact of competition on service quality (Malaquias and Hwang 2019).

The adoption by individuals and firms of digital products and services from financial institutions is heavily influenced by the perceived trustworthiness of the provider (Fu and Mishra 2022). Trust in financial institutions, particularly traditional incumbents, was eroded after the global financial crisis, leading to a shift towards fintech (Goldstein et al. 2019, as cited in Fu and Mishra 2022). However, online banking has inherent vulnerabilities that expose users to various risks (Stewart and Jürjens 2018a), and trust is critical in risky situations. Stewart and Jürjens (2018b) noted that information-security components such as confidentiality, integrity, availability, authentication, accountability, assurance, privacy, and authorization influence customers’ perceptions of trustworthiness. Therefore, fintech adoption is influenced by customer trust, data security, user-interface design, technical difficulties, and a lack of awareness or understanding of the technology (Abidin et al. 2019).

Millennials, born between 1980 and 2000 and considered the most influential generation in consumer spending, comprise a significant portion of online-banking customers. They are more likely to share personal information through social media and online platforms for financial transactions, increasing their risk of information misuse. In addition, millennials have a significantly lower level of financial knowledge than older generations, making them more vulnerable to privacy risks regarding online banking (Liyanaarachchi et al. 2021). Privacy risk in online banking is defined as the potential for loss due to fraud or a hacker compromising the security of an online bank user (Liyanaarachchi et al. 2021). While many customers find fintech convenient and practical, customers less familiar with fintech are more skeptical and concerned about potential risks and negative effects (Swammy et al. 2018).

Many consumers are cautious about and reluctant to engage in online banking transactions due to concerns about the security of their personal information, as most data breaches and identity thefts occur in online banking environments (Stewart and Jürjens 2018a). Fintech firms must address data-security and -privacy concerns to increase client confidence and trust, in order to ensure the broader adoption and acceptance of fintech services (Laksamana et al. 2022). Therefore, banks and financial-service providers should provide transparent information about their security measures, address technical issues that may arise, and provide customer support. By addressing these factors, banks and other financial-service providers can help to build trust and confidence among their customers and encourage the broader adoption of e-banking (Moscato and Altschuller 2012).

4.3. Bridging the Trust Gap: Strategies for Fostering Trust in the Fintech Era

4.3.1. Corporate Digital Responsibility: Enhancing Financial Performance and Digital Trust through Ethical and Responsible Data Processing

New technologies have led to new social challenges and increased corporate responsibilities, particularly in digital technologies and data processing. As a result, the concept of corporate digital responsibility (CDR) has been introduced. The concept refers to various practices and behaviors that aid organizations in using data and technological innovations in a morally, financially, digitally, and ecologically responsible manner (Jelovac et al. 2021). Essentially, CDR is the recognition and dedication on the part of organizations to prioritize the favorable impact of technology on society in all aspects of their operations (Herden et al. 2021). The implementation of a culture of CDR can assist organizations in navigating the complex ethical and societal issues that arise with digital technologies and data processing (Lobschat et al. 2021). Studies have shown that businesses with a CDR culture benefit from indirect performance effects, including customer satisfaction, competitive advantage, customer trust, loyalty, and enhanced company reputation, which result in a positive long-term financial impact (Saeidi et al. 2015). Therefore, organizations can enhance their financial performance, brand equity, and marketability (Lobschat et al. 2021). In the digital age, trust is a critical factor. Trust is “our readiness to be vulnerable to the actions of others because we believe they have good intentions and will treat us accordingly.” Digital trust is trust in digital institutions, technologies, and platforms; it refers to users’ trust in the ability of digital institutions, companies, technologies, and processes to create a safe digital world by safeguarding users’ data privacy (Jelovac et al. 2021).

Creating a digital society and economy is contingent upon a high level of trust among all participants. Digital trust is founded on convenience, user experience, reputation, transparency, integrity, reliability, and the security and control of stakeholders’ data. The adoption of a culture of CDR within modern businesses and organizations is necessary to establish and maintain digital trust. This offers numerous benefits to companies, including the shaping of their future, the development and maintenance of positive, long-term relationships with stakeholders, improvements in reputation, the creation of competitive advantage, and increases in employee cohesion and productivity (Herden et al. 2021).

Corporate digital responsibility entails organizations’ comprehension of and commitment to the prioritization of technology’s positive impact on society in all aspects of their business (Herden et al. 2021). As a result, CDR contributes to digital trust through corporate reputation disclosures (CRDs). These provide information about a company’s products and services, vision and leadership, financial performance, workplace environment, social and environmental responsibility, emotional appeal, and prospects and public reputation (Baumgartner et al. 2022). Therefore, CDR acts as a signal to decrease the information asymmetry between managers and stakeholders and allows stakeholders to evaluate a company’s ability to meet their needs, as well as its reliability and trustworthiness (Baumgartner et al. 2022).

4.3.2. Ensuring Data Privacy and Security in the Digital Finance Industry: Best Practices and Strategies for Compliance with Data-Protection Laws and Regulations

The protection of individuals’ personal data through compliance with data-privacy laws and regulations is crucial in the digital finance industry. The General Data Protection Regulation (GDPR) gives individuals specific rights regarding their data, such as access to these data, the right to be informed about their collection and use, and the right to have them erased (Ayaburi 2022). Businesses must take necessary measures to protect personal data and obtain explicit consent from individuals for their processing in specific circumstances.

To ensure compliance with data-protection laws and regulations, companies must take several steps to protect against data-privacy breaches. These steps include the implementation of encryption, secure authentication protocols, and de-identification techniques, as well as the establishment and regular review of data-protection policies (Beg et al. 2022). Data-governance frameworks can ensure ethical and responsible big-data management by outlining roles and responsibilities, data-handling practices, and compliance procedures (Stewart and Jürjens 2018a). Regular audits, employee training on data-protection practices, and procedures for detecting and addressing data-privacy breaches are also essential (Abidin et al. 2019). When using AI systems, careful data analysis and privacy-preserving machine-learning techniques are necessary to prevent confounding bias and illegal access to personal data (Abed and Anupam 2022).
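To make the notion of de-identification more concrete, the following minimal Python sketch illustrates one common approach, keyed pseudonymization, in which direct identifiers are removed and a linking field is replaced with an HMAC-SHA256 digest. It is an illustration only and is not drawn from the reviewed studies; the field names, the record layout, and the hard-coded key are hypothetical assumptions, and in practice the key would be held in a dedicated key-management system. Pseudonymized data generally remain personal data under the GDPR, so a step of this kind complements, rather than replaces, the other measures listed above.

```python
import hashlib
import hmac

# Hypothetical secret key (assumption); a real deployment would load it
# from a key-management system rather than from source code.
PSEUDONYM_KEY = b"example-key-not-for-production"

# Hypothetical field names for an illustrative customer record (assumption).
DIRECT_IDENTIFIERS = {"name", "email"}
PSEUDONYMIZED_FIELDS = {"customer_id"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return a keyed HMAC-SHA256 digest of the value as a hex string."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict) -> dict:
    """Drop direct identifiers and replace linking fields with pseudonyms."""
    cleaned = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            continue  # remove the field entirely
        if field in PSEUDONYMIZED_FIELDS:
            cleaned[field] = pseudonymize(str(value))
        else:
            cleaned[field] = value  # keep non-identifying attributes as-is
    return cleaned


if __name__ == "__main__":
    record = {
        "customer_id": "C-1001",
        "name": "Jane Doe",
        "email": "jane@example.com",
        "balance": 2500,
    }
    print(deidentify(record))
```

Because the digest is keyed, the same customer maps to the same pseudonym across data sets, which preserves the ability to link records for analysis, while parties without the key cannot recompute the mapping from known identifiers.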

Employee responsibility and accountability are crucial in organizations’ information security and data protection. A lack of adequate internal controls has been identified as a cause of fraud and asset misappropriation in firms (Lokanan 2014). To prevent customer-data theft, companies must prioritize employee training, carefully recruit staff, monitor customer data, oversee third-party access, use advanced technology, and prevent unauthorized access to data. The factors contributing to data theft include staff stealing customer data, noncompliance with customer-data-protection policies, a lack of knowledge about data-protection duties and procedures, and ignorance of client-data-protection procedures (Abidin et al. 2019).

Leadership is critical in ensuring data privacy and security within organizations. Leaders can address privacy concerns, build trust through effective sales and marketing strategies, and manage online banking platforms to encourage interactions that enhance confidentiality and trust, leading to a competitive advantage (Liyanaarachchi et al. 2020). To ensure data protection, leaders must obtain customers’ consent regarding the use of their data, take concrete precautions to protect these data, and delete them when they are no longer required (Abidin et al. 2019). Additionally, leaders must ensure that staff members are trained in data-protection procedures and held accountable for following them, and they must create protocols for identifying and responding to data-privacy breaches (Liyanaarachchi et al. 2021).

The study by Abidin et al. (2019) found that 56% of staff members at ABC Bank Services needed appropriate training related to customer-data protection for their job functions and responsibilities. This finding suggests ineffective communication channels and poor monitoring by senior management, leading to the need for a better understanding of the latest customer-data-protection policies and procedures. Overall, the primary goals of data-protection activities are to maintain a state of security and control security risks throughout an organization (La Torre et al. 2019). Organizations must comprehend the risks involved and establish who is responsible for data protection to safeguard against data breaches and maintain their clients’ trust. This requires the understanding that protecting an organization’s data involves more than determining whether privacy is a right or a commodity.

5. Results and Discussion

In this study, we conducted a content analysis of the literature to investigate the ethical and privacy considerations associated with the intersection of big data, artificial intelligence (AI), and privacy in the digital finance industry. A thematic analysis was used to identify three major themes:

5.1. Ethical Considerations in Fintech: The Intersection of Big Data, AI, and Privacy

This theme focuses on the ethical concerns raised by the integration of big data and AI in the financial sector, highlighting the need to address issues such as bias, discrimination, privacy, transparency, justice, ownership, and control.

5.2. Navigating the Complex Relationship between Fintech and Customer Trust: Addressing Data Privacy and Security Concerns

This theme examines the intricate interplay between fintech and customer trust, emphasizing the importance of resolving data-security and privacy issues. It calls for firms to gather and utilize customer data responsibly, maintain reliable data-security measures, and comply with data-protection laws and regulations.

5.3. Bridging the Trust Gap: Strategies for Fostering Trust in the Fintech Era

This theme offers strategies for building trust in fintech companies and consists of two sub-themes, which are described below.

5.3.1. Corporate Digital Responsibility: Enhancing Financial Performance and Digital Trust through Ethical and Responsible Data Processing

This sub-theme emphasizes the importance of a culture of corporate digital responsibility (CDR) in enhancing financial performance and digital trust. It highlights indirect performance benefits, such as customer satisfaction, competitive advantage, customer trust, loyalty, and company reputation.

5.3.2. Ensuring Data Privacy and Security in the Digital Finance Industry: Best Practices and Strategies for Compliance with Data-Protection Laws and Regulations

This sub-theme presents best practices and approaches for adhering to data-privacy rules and regulations, emphasizing the need to safeguard customer data, utilize encryption techniques, and regularly evaluate and update data-protection policies. It also calls for companies to be transparent about their data-collection and -usage processes and to provide their staff with appropriate training related to customer-data protection.

5.4. Results Tables

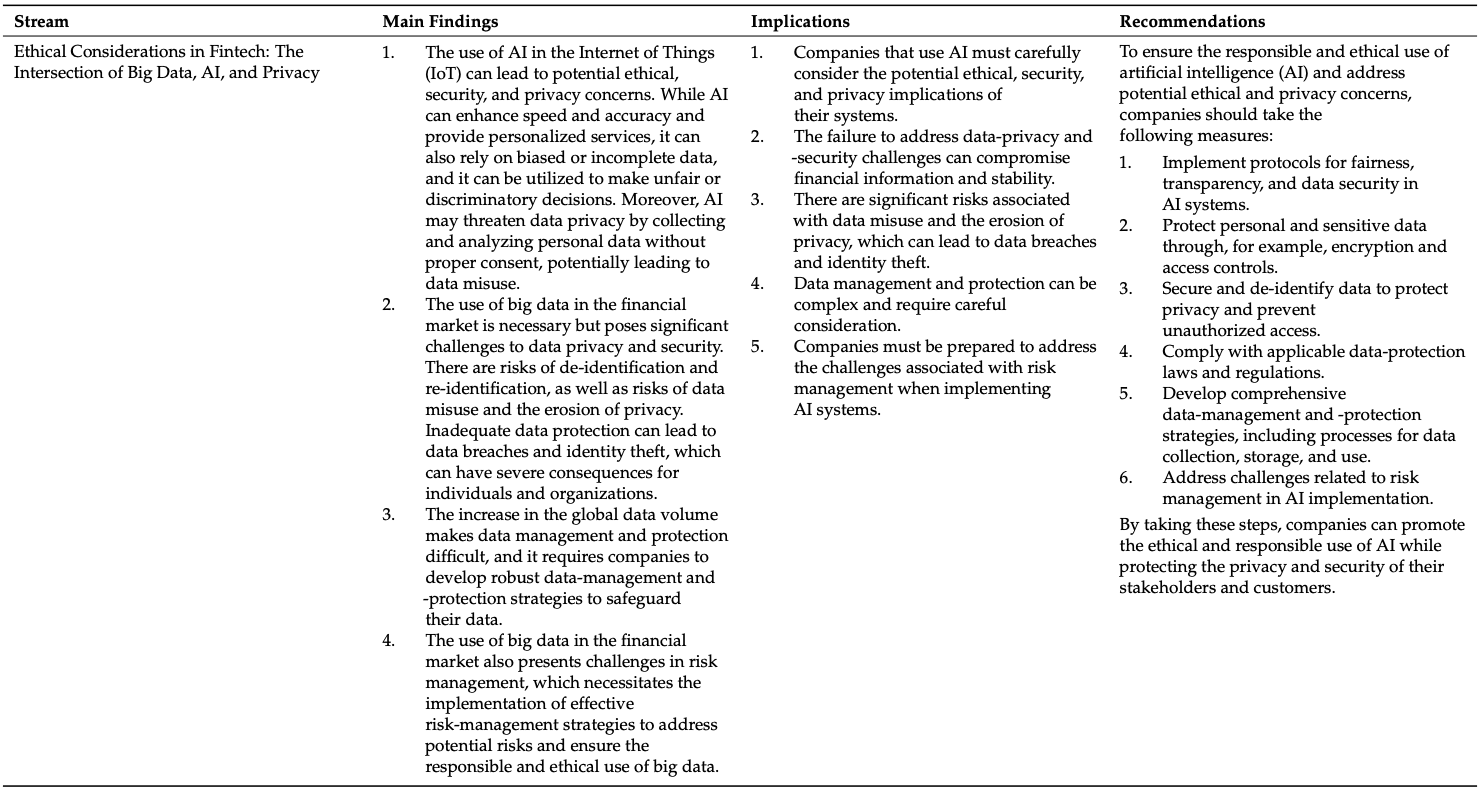

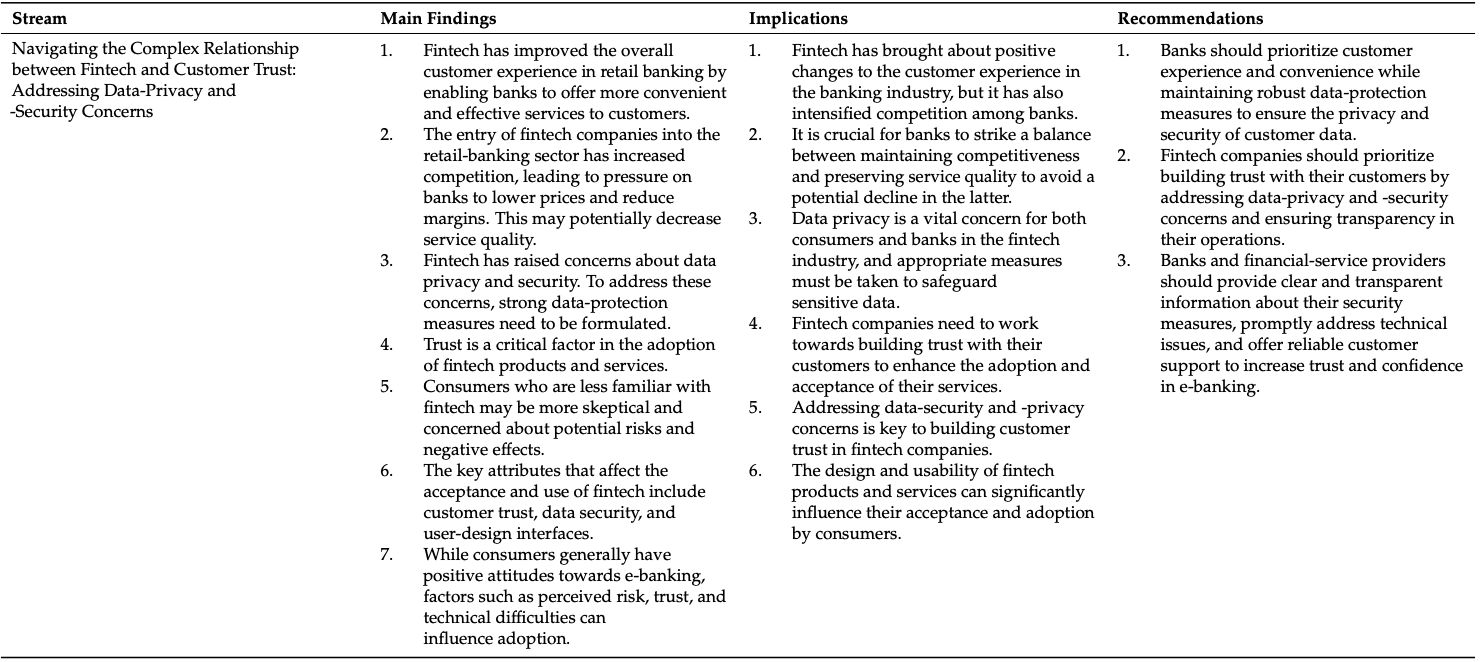

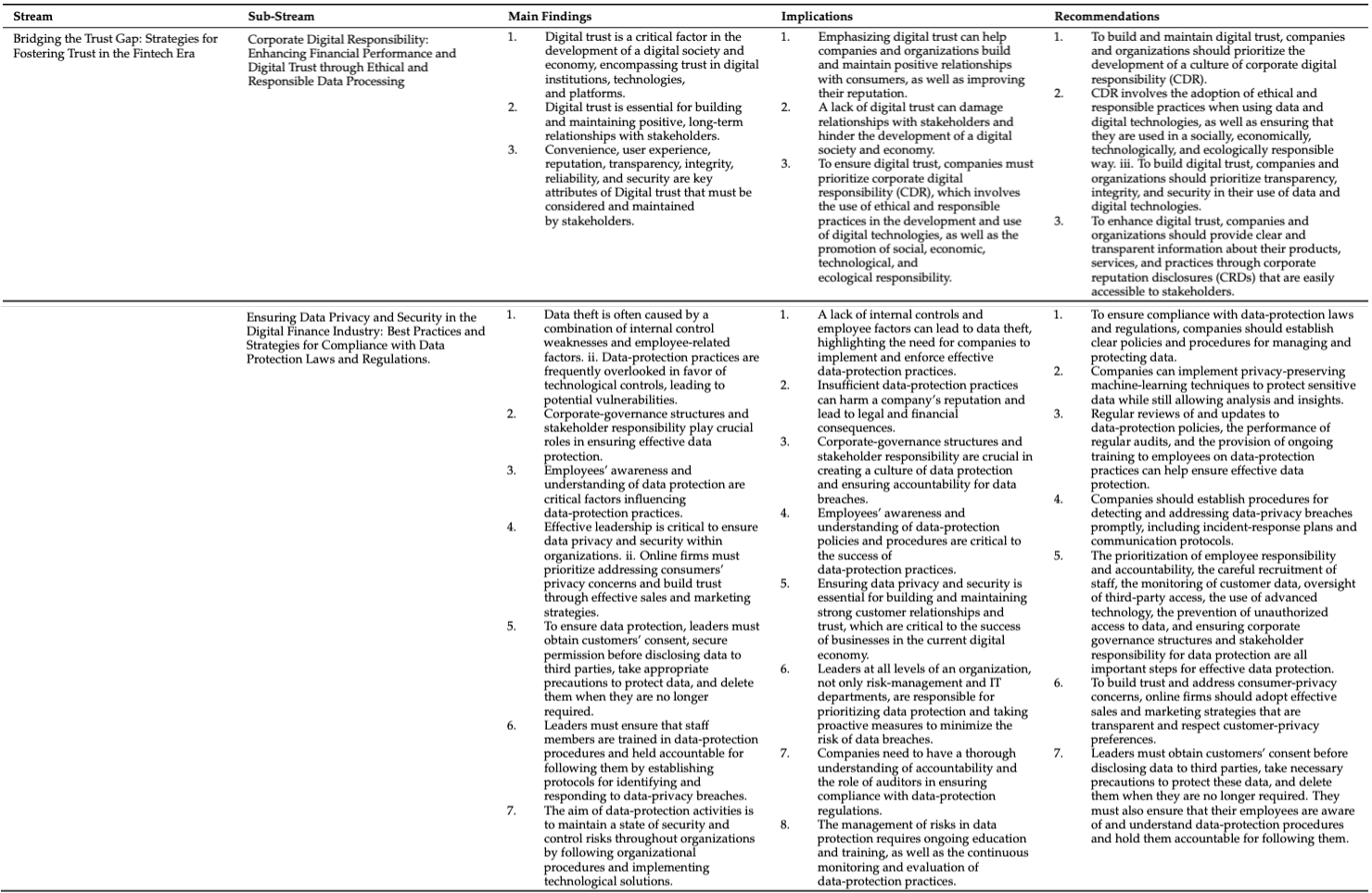

This section provides a performance analysis of the three major themes that emerged from our thematic analysis of the literature on ethical and privacy considerations in the digital finance industry. Table 1 presents the performance analysis of the first theme, Ethical Considerations in Fintech: The Intersection of Big Data, AI, and Privacy, which highlights the importance of addressing concerns such as bias, discrimination, privacy, transparency, justice, ownership, and control. Table 2 provides the performance analysis of the second theme, Navigating the Complex Relationship between Fintech and Customer Trust: Addressing Data-Privacy and -Security Concerns, which emphasizes the need to resolve data-security and -privacy issues to foster customer trust in fintech companies. Table 3 offers the performance analysis of the third theme, Bridging the Trust Gap: Strategies for Fostering Trust in the Fintech Era, which presents strategies for building trust in fintech companies, including the importance of corporate digital responsibility and adhering to data-privacy laws and regulations. Our analysis offers insights into the sector’s main privacy issues and suggestions for managing them. Our findings have implications for financial firms, policymakers, and other stakeholders seeking to ensure the responsible and ethical use of big data and AI in the digital finance industry.

Table 1. Results—Ethical Considerations in Fintech: The Intersection of Big Data, AI, and Privacy.

Table 2. Results—Navigating the Complex Relationship between Fintech and Customer Trust: Addressing Data-Privacy and -Security Concerns.

Table 3. Results—Bridging the Trust Gap: Strategies for Fostering Trust in the Fintech Era.

6. Future Research Questions

This study shed light on the intersection of big data, AI, and privacy concerns in the fintech industry and proposed strategies for enhancing data protection and security. Nevertheless, to deepen our understanding of the ethical considerations in fintech, several themes demand further exploration. First, future research could delve into the complex relationship between fintech and customer trust, with an emphasis on addressing data-privacy and -security concerns. Second, a study could be conducted to examine strategies for fostering trust in the fintech era, such as corporate digital responsibility or adherence to data-protection laws and regulations. Third, the impact of cultural and societal norms on the adoption of fintech and the use of big data and AI in the finance industry could be a promising area for future research. By exploring these themes, researchers can provide practical suggestions for stakeholders seeking to ensure the responsible and ethical use of big data and AI in the digital finance industry.

Ethical Considerations in Fintech: The Intersection of Big Data, AI, and Privacy

1.1. What are the ethical implications of the integration of big-data analytics, artificial intelligence (AI), and financial technology (fintech) in the banking sector?

1.2. How does the use of AI algorithms and big-data analytics in fintech raise concerns about privacy, fairness, transparency, bias, and the ownership and control of personal data?

1.3. Which strategies and practices can fintech companies adopt to ensure the ethical use of customer data while harnessing the benefits of big data and AI?

Navigating the Complex Relationship between Fintech and Customer Trust: Addressing Data-Privacy and -Security Concerns

2.1. How does customer trust in financial institutions influence the adoption and acceptance of fintech services, particularly regarding data privacy and security?

2.2. What are the main concerns and vulnerabilities associated with data privacy and security in online banking, and how do they affect customer trust in fintech?

2.3. Which measures can banks and financial-service providers implement to address data-security and -privacy concerns, enhance customer trust, and promote the broader adoption of fintech services?

Bridging the Trust Gap: Strategies for Fostering Trust in the Fintech Era

3.1. How can organizations effectively implement corporate digital responsibility (CDR) practices to enhance financial performance and cultivate digital trust in the context of fintech?

3.2. Which roles do transparency, integrity, reputation, and accountability play in fostering digital trust in fintech, and how can companies communicate these aspects through corporate reputation disclosures (CRDs)?

3.3. What are the long-term benefits for organizations that adopt a culture of CDR and establish digital trust, and how can these benefits contribute to financial performance, brand equity, and marketability?

Ensuring Data Privacy and Security in the Digital Finance Industry: Best Practices and Strategies for Compliance with Data-Protection Laws and Regulations

4.1. Which steps can financial institutions and fintech companies take to ensure compliance with data-protection laws and regulations, specifically concerning the General Data Protection Regulation (GDPR)?

4.2. What are the best practices and strategies for protecting individuals’ data in the digital finance industry, considering encryption, secure authentication protocols, de-identification techniques, data-governance frameworks, and regular audits?

4.3. How can employee responsibility, accountability, and leadership practices contribute to data privacy and security within financial organizations, and which measures can be taken to prevent data breaches and unauthorized access to customer data?

7. Conclusions

The integration of big data and AI in the fintech industry has created numerous benefits, including individualized financial services, increased operational efficiency, and cost reduction. However, this study revealed that addressing ethical and privacy concerns is crucial to maintaining customer trust and confidence. To this end, the study highlighted several best practices and approaches, such as responsible data collection and usage, reliable data-security measures, diverse and representative data sets, transparency, and compliance with data-protection laws and regulations. These findings have important policy implications. Firstly, policymakers should continue to monitor and adapt regulatory frameworks to keep pace with the evolving landscape of the fintech industry. This includes updating data-protection laws and regulations to address the challenges posed by big data and AI and ensuring that compliance and enforcement mechanisms are robust and effective. Secondly, collaborative efforts between fintech companies, regulators, and consumers are essential for addressing ethical and privacy challenges. Policymakers should foster dialogue and engagement among stakeholders to establish common standards, share best practices, and develop guidelines that encourage responsible data usage and protection.

Financial education is another policy implication that emerged from this study. Given the vulnerability of younger generations to the privacy risks associated with online banking, policymakers should prioritize financial education initiatives. By enhancing financial literacy and increasing awareness of data privacy and security among individuals, policymakers can empower consumers to make informed decisions and protect their personal information.

Despite the valuable insights provided by this study, it is important to acknowledge its limitations. The selection of relevant studies may have influenced the scope and generalizability of the findings. The analysis is also limited by the availability and accessibility of data on specific fintech practices and their impact on ethical and privacy concerns.

Furthermore, the rapidly evolving nature of technology means that ethical and privacy considerations in the fintech industry are constantly changing, and the findings of this study may become outdated over time. Additionally, research bias may have been present despite efforts to ensure a comprehensive and systematic review process. The research team’s choices and judgments throughout the review process may have introduced a certain level of subjectivity.

An awareness of these limitations is crucial for interpreting and applying the findings of this study. In conclusion, by considering the policy implications and limitations outlined above, policymakers, industry stakeholders, and researchers can work together to foster a responsible and ethically driven fintech ecosystem that prioritizes customer trust, data privacy, and societal well-being. Continued research and collaboration are needed to address emerging ethical and privacy concerns in the rapidly evolving fintech landscape and ensure the industry’s sustainable growth.